Multimodal GenAI in 2025: Proven Strategies and Emerging Trends You Can Use

Multimodal GenAI is getting a lot of attention because it can work with text, images, and audio at the same time. As the technology matures, this capability matters more than ever.

This guide will help you use multimodal GenAI tools in your work and apply them to real problems, such as better customer service or faster health care.

It explains how multimodal GenAI works, walks through real examples, and covers the latest trends.

Understanding Multimodal GenAI

Multimodal GenAI refers to systems capable of processing and integrating multiple types of data, such as text, images, and audio. This capability allows for a more nuanced interpretation of complex information.

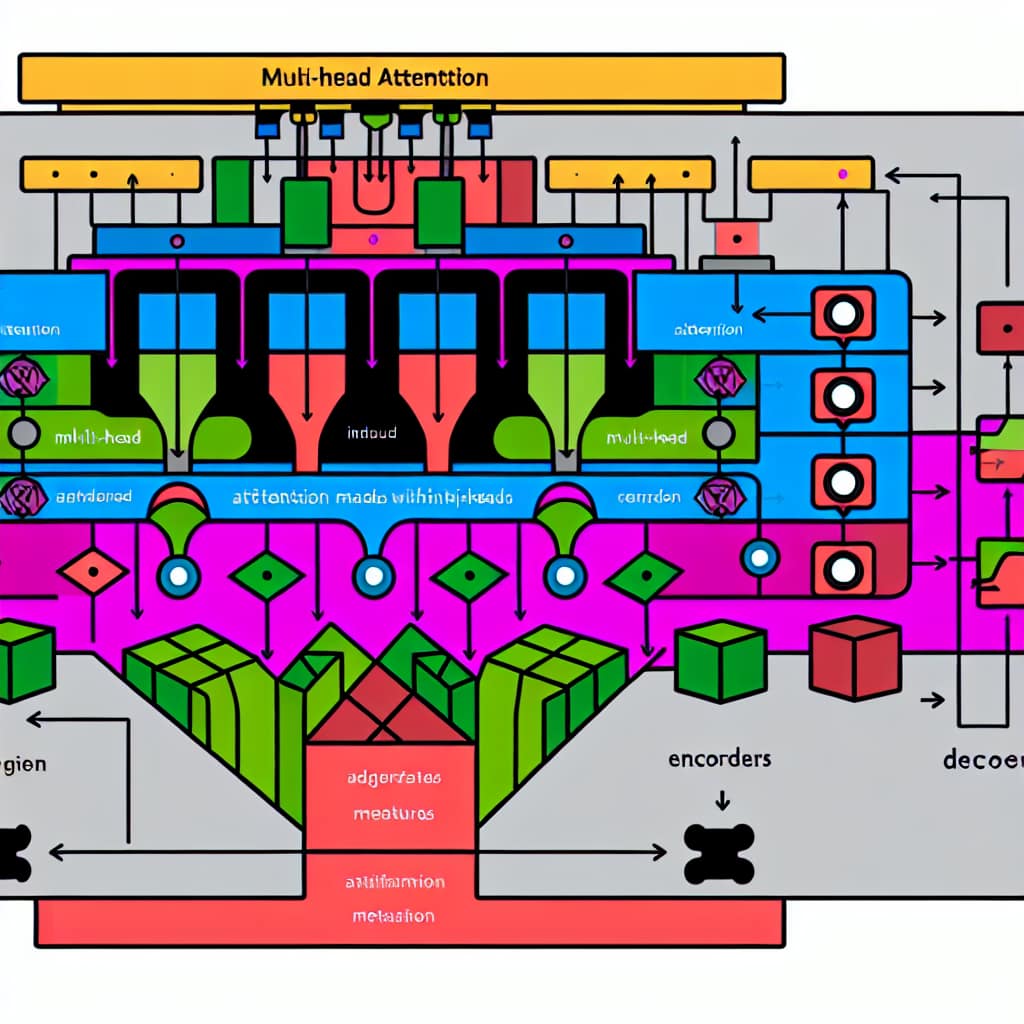

This integration is typically built on neural networks, most notably the transformer architecture, which can process different data types once each is converted into a sequence of embeddings.

Learn more from leading research on multimodal AI on arXiv.

Core Building Blocks

Most multimodal systems share a similar structure, even if the details vary by model family. Understanding the building blocks helps you plan better use cases and avoid common mistakes.

Encoders for each modality

An encoder converts raw input into a numeric representation. Text encoders process tokens. Image encoders process pixels or patches. Audio encoders process waveforms or spectrograms.
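To make the encoder step concrete, here is a minimal Python sketch of the preprocessing that feeds each encoder: word-level tokenization for text, patch extraction for images, and a magnitude spectrogram for audio. It uses only the standard library and toy sizes. The vocabulary, patch size, and frame sizes are illustrative assumptions, and real systems pass these outputs through learned neural encoders rather than using them directly.

```python
import math

# Toy preprocessing for each modality. Real systems feed these outputs into
# learned encoders; the vocabulary and sizes here are illustrative assumptions.

def tokenize(text, vocab):
    """Text: map lowercase whitespace-separated words to token IDs (0 = unknown)."""
    return [vocab.get(word, 0) for word in text.lower().split()]

def image_to_patches(image, patch):
    """Image: cut a 2D pixel grid into non-overlapping patch x patch squares,
    each flattened into one vector (leftover edge pixels are dropped)."""
    h, w = len(image), len(image[0])
    patches = []
    for top in range(0, h - h % patch, patch):
        for left in range(0, w - w % patch, patch):
            patches.append([image[r][c]
                            for r in range(top, top + patch)
                            for c in range(left, left + patch)])
    return patches

def magnitude_spectrogram(wave, frame, hop):
    """Audio: slice a waveform into frames and compute DFT magnitudes per frame
    (a naive O(n^2) DFT, fine for a demo; real code would use an FFT)."""
    frames = []
    for start in range(0, len(wave) - frame + 1, hop):
        chunk = wave[start:start + frame]
        mags = []
        for k in range(frame // 2 + 1):  # non-negative frequency bins only
            re = sum(x * math.cos(2 * math.pi * k * n / frame) for n, x in enumerate(chunk))
            im = sum(x * math.sin(2 * math.pi * k * n / frame) for n, x in enumerate(chunk))
            mags.append(math.hypot(re, im))
        frames.append(mags)
    return frames

vocab = {"a": 1, "cat": 2, "on": 3, "mat": 4}
print(tokenize("A cat on the mat", vocab))  # [1, 2, 3, 0, 4]

image = [[r * 4 + c for c in range(4)] for r in range(4)]  # 4x4 grayscale grid
print(image_to_patches(image, 2)[0])  # [0, 1, 4, 5]

wave = [math.sin(2 * math.pi * 2 * n / 8) for n in range(16)]  # pure tone at bin 2
spec = magnitude_spectrogram(wave, frame=8, hop=8)
print(len(spec), max(range(len(spec[0])), key=lambda k: spec[0][k]))  # 2 2
```

Each function turns one modality into a list of numeric vectors, which is the common shape a transformer-style encoder expects.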

A shared representation space

The system needs a way to align information across modalities. Many approaches map different inputs into a shared space. This helps the model connect concepts across text, images, and audio.
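One common way to build the shared space, popularized by CLIP-style models, is to project each modality's embedding into a common dimension, normalize it, and train with a contrastive loss so matching text-image pairs score higher than mismatched ones. The sketch below assumes pre-computed encoder outputs and hand-picked projection weights (in practice these are learned). It illustrates the idea and is not any specific model's implementation.

```python
import math

def project(vec, weights):
    """Linear projection into the shared space; weights is out_dim rows of in_dim."""
    return [sum(w * x for w, x in zip(row, vec)) for row in weights]

def normalize(vec):
    """Scale to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]

def similarity_matrix(text_vecs, image_vecs, w_text, w_image):
    """Cosine similarity of every text embedding against every image embedding."""
    t = [normalize(project(v, w_text)) for v in text_vecs]
    i = [normalize(project(v, w_image)) for v in image_vecs]
    return [[sum(a * b for a, b in zip(tv, iv)) for iv in i] for tv in t]

def cross_entropy(rows, temperature):
    """Mean cross-entropy where row k's correct answer is column k."""
    total = 0.0
    for k, row in enumerate(rows):
        logits = [s / temperature for s in row]
        m = max(logits)
        log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
        total += log_sum_exp - logits[k]
    return total / len(rows)

def contrastive_loss(sim, temperature=0.07):
    """CLIP-style symmetric loss: matched pairs sit on the diagonal of `sim`."""
    columns = [list(col) for col in zip(*sim)]
    return (cross_entropy(sim, temperature) + cross_entropy(columns, temperature)) / 2

# Hypothetical encoder outputs: 3-dim text vectors, 4-dim image vectors.
texts = [[1, 0, 0], [0, 1, 0]]              # "a dog", "a car"
images = [[1, 0, 0, 0], [0, 0, 1, 0]]       # dog photo, car photo
w_text = [[1, 0, 0], [0, 1, 0]]             # 3 -> 2 dims (hand-picked)
w_image = [[1, 0, 0, 0], [0, 0, 1, 0]]      # 4 -> 2 dims (hand-picked)
w_image_bad = [[0, 0, 1, 0], [1, 0, 0, 0]]  # swaps concepts: a misaligned space

aligned = contrastive_loss(similarity_matrix(texts, images, w_text, w_image))
misaligned = contrastive_loss(similarity_matrix(texts, images, w_text, w_image_bad))
print(aligned < misaligned)  # True
```

A low loss means each caption is closest to its own image in the shared space, which is what lets the model connect concepts across modalities.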

Fusion or cross-attention

Fusion is how the model combines information. Some models merge embeddings early. Others use cross-attention so text can attend to image regions, or audio segments can attend to text tokens.
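The difference between early fusion and cross-attention can be sketched in a few lines. This is a simplified, single-head scaled dot-product attention with no learned query, key, or value projections (an assumption made to keep it short). The toy embeddings are invented so that the text query clearly matches the first image region.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def early_fusion(text_embeddings, image_embeddings):
    """Early fusion: concatenate both sequences so one model processes them together."""
    return text_embeddings + image_embeddings

def cross_attention(queries, keys, values):
    """Single-head scaled dot-product attention: each text query takes a weighted
    mix of image values, weighted by how well it matches each image key.
    Simplification: no learned Q/K/V projections."""
    d = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
        w = softmax(scores)
        weights.append(w)
        outputs.append([sum(w_j * v[i] for w_j, v in zip(w, values))
                        for i in range(len(values[0]))])
    return outputs, weights

# Toy 2-dim embeddings (invented): the text token "dog" and two image regions.
text_tokens = [[4.0, 0.0]]                # "dog"
image_regions = [[4.0, 0.0], [0.0, 4.0]]  # region 0 looks like a dog, region 1 does not

fused_sequence = early_fusion(text_tokens, image_regions)
outputs, weights = cross_attention(text_tokens, image_regions, image_regions)
print(len(fused_sequence))   # 3
print(weights[0][0] > 0.99)  # True: "dog" attends almost entirely to region 0
```

Early fusion leaves it to later layers to relate the two modalities, while cross-attention makes that relationship explicit as per-region weights.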

A generator or decoder

If the goal is generation, the model needs a decoder. It may generate text, images, audio, or a mix. In many products, text is still the most common output, even when inputs include images or audio.
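Many text decoders generate output autoregressively, one token at a time, with each step conditioned on the fused input and the tokens produced so far. The loop below shows greedy decoding under that assumption. `next_token_scores` is a hypothetical stand-in for a real decoder network, and the lookup-table scorer exists only to make the example runnable.

```python
def greedy_decode(next_token_scores, context, max_len=10, end="<end>"):
    """Generate tokens one at a time, always picking the highest-scoring next token.
    `next_token_scores(context, prefix) -> {token: score}` is a hypothetical
    interface standing in for a real decoder conditioned on fused inputs."""
    output = []
    for _ in range(max_len):
        scores = next_token_scores(context, output)
        token = max(scores, key=scores.get)
        if token == end:
            break
        output.append(token)
    return output

# Toy scorer (hypothetical): looks up a fixed caption for a known image context.
CAPTIONS = {"image:dog_photo": ["a", "dog", "on", "grass"]}

def toy_scores(context, prefix):
    target = CAPTIONS.get(context, [])
    next_tok = target[len(prefix)] if len(prefix) < len(target) else "<end>"
    return {tok: (1.0 if tok == next_tok else 0.0) for tok in set(target) | {"<end>"}}

print(greedy_decode(toy_scores, "image:dog_photo"))  # ['a', 'dog', 'on', 'grass']
```

Greedy decoding is the simplest strategy; production systems often use sampling or beam search instead.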

Applications in GenAI Images

Image-generation models use AI to create visuals that can be highly realistic, sometimes hard to distinguish from photographs. This technology is changing industries like entertainment and marketing, where visual content is important.

Models trained on large image datasets can generate new, high-quality images or edit existing ones at the pixel level. This can cut time and cost while improving creative flexibility.

Applications in GenAI Audio

GenAI audio is transforming how sound is produced and manipulated. By analyzing audio data, AI systems can generate realistic speech, music, and sound effects that support immersive experiences in gaming and virtual reality.

AI audio models can also aid accessibility. They can power tools for people with disabilities, including better narration, transcription, and voice interfaces.

Evaluation and Quality Checks

Multimodal GenAI can look impressive, but quality control is harder than it seems. Teams often test only the best-case paths. Then the system struggles in production because real users bring messy inputs.

Start with input robustness tests. Try blurry images, low-light photos, and noisy audio. Keep a small labeled set that matches your real use case so you can measure quality reliably.
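A small robustness harness can apply these perturbations to a labeled set and compare accuracy against the clean baseline. In this sketch the box blur, brightness factor, noise level, and the toy `has_bright_spot` classifier are all hypothetical choices. Swap in your own model and a labeled set drawn from real traffic.

```python
import random

# Hypothetical perturbations for robustness testing (parameters are illustrative).

def blur(image):
    """3x3 box blur on a 2D grayscale image; edge pixels average available neighbors."""
    h, w = len(image), len(image[0])
    out = []
    for r in range(h):
        row = []
        for c in range(w):
            vals = [image[rr][cc]
                    for rr in range(max(0, r - 1), min(h, r + 2))
                    for cc in range(max(0, c - 1), min(w, c + 2))]
            row.append(sum(vals) / len(vals))
        out.append(row)
    return out

def low_light(image, factor=0.3):
    """Simulate a dark photo by scaling every pixel's brightness."""
    return [[p * factor for p in row] for row in image]

def add_noise(wave, std=0.1, seed=0):
    """Add Gaussian noise to an audio waveform; a fixed seed keeps tests repeatable."""
    rng = random.Random(seed)
    return [x + rng.gauss(0, std) for x in wave]

def accuracy(model, labeled, perturb=lambda x: x):
    """Fraction of (input, label) pairs the model gets right after perturbation."""
    correct = sum(model(perturb(x)) == y for x, y in labeled)
    return correct / len(labeled)

# Toy classifier (hypothetical): reports "object" if any pixel is bright.
def has_bright_spot(image):
    return "object" if max(max(row) for row in image) > 0.5 else "empty"

labeled = [
    ([[0, 0, 0], [0, 1.0, 0], [0, 0, 0]], "object"),
    ([[0, 0, 0], [0, 0, 0], [0, 0, 0]], "empty"),
]
report = {name: accuracy(has_bright_spot, labeled, fn)
          for name, fn in [("clean", lambda x: x), ("blur", blur), ("low_light", low_light)]}
print(report)  # {'clean': 1.0, 'blur': 0.5, 'low_light': 0.5}
```

The toy model is perfect on clean inputs and fails on both perturbations, which is exactly the kind of gap that best-case testing hides.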

Add safety checks where relevant. For higher-risk workflows, use lightweight human review, especially when outputs impact users directly.
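Human review works best when routing rules are explicit. The sketch below sends risky or low-confidence outputs to a reviewer and blocks obviously unsafe ones. The workflow names, blocked terms, and the 0.8 confidence threshold are placeholder assumptions, and the simple term check stands in for a proper safety classifier.

```python
from dataclasses import dataclass

# Placeholder policy values (hypothetical); set these from your own risk review.
HIGH_RISK_WORKFLOWS = {"medical_summary", "loan_decision"}
BLOCKED_TERMS = {"password:", "ssn:"}  # stand-in for a real safety classifier

@dataclass
class ModelOutput:
    text: str
    confidence: float  # assumed calibrated score in [0, 1]
    workflow: str      # which product flow produced this output

def route(output: ModelOutput, min_confidence: float = 0.8) -> str:
    """Decide whether an output is blocked, sent to a human, or auto-approved."""
    lowered = output.text.lower()
    if any(term in lowered for term in BLOCKED_TERMS):
        return "block"
    if output.workflow in HIGH_RISK_WORKFLOWS or output.confidence < min_confidence:
        return "human_review"
    return "auto_approve"

print(route(ModelOutput("Summer sale banner text", 0.95, "marketing_copy")))  # auto_approve
print(route(ModelOutput("Likely benign lesion", 0.97, "medical_summary")))     # human_review
```

Keeping the rules in one function makes it easy to audit which outputs reach users without review.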

Emerging Trends in Multimodal GenAI

Looking ahead, multimodal GenAI shows promise in refining natural language processing and improving human-computer interactions.

We expect to see hybrid models that unite vision, speech, and text understanding. These models can unlock new applications in robotics and autonomous systems.

Ethical considerations will grow in importance. It is essential to develop frameworks that make these systems transparent and fair.

Real-World Examples

One real-world example is Google DeepMind, whose research combines various data forms to advance healthcare diagnostics.

Similarly, Adobe Firefly helps designers generate images from text descriptions, streamlining creative workflows.

These examples show that multimodal GenAI is both innovative and practical. It addresses specific needs and speeds up real production work.

Deployment and MLOps for Multimodal Systems

Multimodal GenAI can be heavier to run than text-only systems. It often has higher compute cost, more input variability, and tougher quality control needs.

Define input limits such as max image size, supported formats, and audio duration. Monitor latency, error rates, and cost per request. Keep logs safe and avoid storing sensitive user data.
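Input limits and basic monitoring can start as a few lines of code in front of the model. In this sketch the specific limits, the format list, and the metric names are assumptions to adapt. The metrics object records only numbers, not raw inputs, in line with keeping sensitive user data out of logs.

```python
import statistics
from dataclasses import dataclass, field

# Hypothetical limits; tune them to your model and infrastructure.
MAX_IMAGE_BYTES = 5 * 1024 * 1024
ALLOWED_IMAGE_FORMATS = {"png", "jpeg", "webp"}
MAX_AUDIO_SECONDS = 60.0

def validate_request(image_format=None, image_bytes=0, audio_seconds=0.0):
    """Return a list of human-readable problems; an empty list means accept."""
    problems = []
    if image_format is not None:
        if image_format.lower() not in ALLOWED_IMAGE_FORMATS:
            problems.append(f"unsupported image format: {image_format}")
        if image_bytes > MAX_IMAGE_BYTES:
            problems.append("image too large")
    if audio_seconds > MAX_AUDIO_SECONDS:
        problems.append("audio too long")
    return problems

@dataclass
class Metrics:
    """Aggregate numbers only; no request content is stored."""
    latencies_ms: list = field(default_factory=list)
    errors: int = 0
    total_cost: float = 0.0

    def record(self, latency_ms, cost, ok=True):
        self.latencies_ms.append(latency_ms)
        self.total_cost += cost
        if not ok:
            self.errors += 1

    def summary(self):
        n = len(self.latencies_ms)
        return {
            "requests": n,
            "error_rate": self.errors / n if n else 0.0,
            "p50_latency_ms": statistics.median(self.latencies_ms) if n else 0.0,
            "cost_per_request": self.total_cost / n if n else 0.0,
        }

print(validate_request("gif", 1024))  # ['unsupported image format: gif']
```

Rejecting bad inputs before inference keeps cost predictable, and the summary gives the latency, error-rate, and cost-per-request signals the text recommends tracking.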

For staged rollouts, use gradual traffic ramps and a fallback path. This reduces risk and makes issues easier to isolate.
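A staged rollout can be sketched as deterministic traffic bucketing plus a fallback to the current model. The ramp percentages, the 2% error threshold, and the function names below are hypothetical. Hashing a stable ID keeps it on a consistent path across requests, and rolling back to 0% when errors rise makes regressions easier to isolate.

```python
import hashlib

RAMP_STAGES = [0, 1, 5, 25, 50, 100]  # % of traffic on the new model (hypothetical)

def bucket(request_id: str) -> int:
    """Stable bucket in [0, 100): the same ID always lands in the same bucket."""
    digest = hashlib.sha256(request_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % 100

def handle(request_id, payload, new_model, old_model, percent):
    """Send roughly `percent`% of traffic to new_model; fall back on any failure."""
    if bucket(request_id) < percent:
        try:
            return "new", new_model(payload)
        except Exception:
            # Fallback path: a failing new model should not fail the user's request.
            return "fallback", old_model(payload)
    return "old", old_model(payload)

def next_stage(current: int, error_rate: float, max_error_rate: float = 0.02) -> int:
    """Advance one ramp stage while errors stay low; otherwise roll back to 0%."""
    if error_rate > max_error_rate:
        return 0
    idx = RAMP_STAGES.index(current)
    return RAMP_STAGES[min(idx + 1, len(RAMP_STAGES) - 1)]

def broken_model(payload):
    raise RuntimeError("new model failed")

print(next_stage(5, error_rate=0.01))   # 25
print(next_stage(25, error_rate=0.10))  # 0
```

In practice the error rate fed to `next_stage` would come from the same monitoring used for latency and cost.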

Final Thoughts

Multimodal GenAI marks a significant step forward in integrating diverse forms of data. As industries adopt these technologies, they are changing how information is processed and applied, improving both efficiency and innovation.

Staying informed on these trends is crucial for professionals in the AI and data science fields. Explore more about AI and data science on GenAI Blogs, and consider subscribing to receive the latest updates on digital advancements.

Practical Applications for Data Teams

These integrated systems are becoming essential tools for data engineering and machine learning teams. When implemented thoughtfully, multimodal GenAI enables organizations to create more intelligent pipelines that process diverse data streams simultaneously.

For teams looking to adopt multimodal GenAI, starting with a single use case, whether image recognition, audio processing, or text analysis, provides a manageable entry point.

Real-world implementations show that adoption often requires investment in infrastructure, training, and iterative refinement. As multimodal GenAI continues to mature, the technology becomes increasingly accessible to organizations of all sizes.

Teams that pioneer multimodal GenAI implementations today can build durable advantages as these technologies become industry standards.

FAQs

What is multimodal GenAI?

Multimodal GenAI is a type of artificial intelligence that processes and integrates multiple kinds of data inputs, such as text, visual, and audio data, to provide a more comprehensive understanding.

How does GenAI enhance multimedia content?

GenAI enhances multimedia content by generating realistic images and high-quality audio, which can be used to personalize and improve user experiences across many applications.

What are the challenges of multimodal GenAI?

Major challenges include managing the complexity of integrating diverse data forms, maintaining data consistency, and addressing ethical concerns such as privacy and bias.

Author Bio

Sudhir Dubey is an AI researcher and data science educator focused on practical AI deployment and fine-tuning strategies for enterprise use cases.