How Small LLMs Are Supercharging Edge AI in 2025 (And Why It Matters)

Small LLMs for edge AI deployment are quietly flipping the script. No more waiting on cloud servers or leaking data into the digital void. Now your device (yes, the one in your hand) can process language fast, right there on the spot.

Case in point: a developer ran a compact LLM on a Raspberry Pi, and for quick requests it beat the round trip to a full cloud setup. Instant replies. Zero internet. That little board handled tasks like a caffeinated coder on a deadline. We’re talking smooth, secure, and straight-up impressive.

- Why Small LLMs Matter Now

- Real-World Use Cases That Actually Work

- What’s Coming Next (Spoiler: It’s Wild)

Why Small LLMs Matter Now

Let’s be real. Cloud-based AI can be slow, clunky, and just plain risky. Need a voice assistant to reply fast? Or a car to make a decision on the fly? The cloud’s too far away. That’s where small LLMs shine.

By running right on your device, these models skip the internet traffic. That means faster results and way better privacy. No more sending your questions or commands to some mystery server in another country.

And hey, data stays local. So if you’re in healthcare or finance, that’s a win. Far less to stress about when it comes to leaks or shady third-party access. It’s just you, your data, and a smart device that knows what to do with it.

Real-World Use Cases That Actually Work

These aren’t just theories or lab tests. Small LLMs are already doing real work. Wearable fitness trackers now offer personalized coaching right from your wrist. No cloud, no lag. Just real-time tips like, “Fix your posture,” or “Pick up the pace.”

Factory floor? Picture this: a tiny LLM analyzing motor sounds and temperature spikes, predicting breakdowns before they even happen. It’s like a seasoned mechanic tucked inside your robot arm, minus the wrench.
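Want a feel for the "catch it before it breaks" idea? Here's a minimal sketch. To be clear, this is not an LLM. It's a plain rolling z-score check on temperature readings, swapped in to show the flagging step in a few lines. The function name `flag_spikes`, the window size, the threshold, and the sample readings are all made up for illustration.

```python
from collections import deque
from statistics import mean, stdev


def flag_spikes(readings, window=5, threshold=3.0):
    """Return the indexes of readings far above the recent rolling average."""
    recent = deque(maxlen=window)  # holds only the last `window` readings
    flagged = []
    for i, value in enumerate(readings):
        if len(recent) == window:
            mu, sigma = mean(recent), stdev(recent)
            # Flag a reading that sits more than `threshold` standard
            # deviations above the recent mean.
            if sigma > 0 and (value - mu) / sigma > threshold:
                flagged.append(i)
        recent.append(value)
    return flagged


# Hypothetical motor temperatures in Celsius, with one obvious spike.
temps_c = [61.0, 61.5, 60.8, 61.2, 61.1, 61.3, 75.0, 61.4]
print(flag_spikes(temps_c))  # prints [6]
```

Everything here runs on the standard library, so it fits on a tiny board. A real setup would likely feed a check like this with sound and vibration features too, then let the on-device model explain what it saw.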

And in finance? It’s already making waves. Local LLMs sift through transaction data right on the device. No lag, no cloud detour. If something sketchy pops up, boom, it’s flagged in real time.

That’s security with speed baked in.

What’s Coming Next (Spoiler: It’s Wild)

Researchers are shrinking these models like laundry on hot, using tricks like quantization, pruning, and distillation. Smaller files, nearly the same smarts. Pretty soon, your earbuds or glasses might house a full AI assistant. Imagine real-time translations during a convo. No delay. No awkward pauses.
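How much smaller are we talking? Here's a back-of-the-envelope sketch. It assumes weight storage is roughly parameters times bits per weight, and it ignores extras like quantization metadata and runtime memory. The 3-billion-parameter model is a hypothetical example, not a specific product.

```python
def model_size_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Hypothetical 3-billion-parameter model at common precisions.
for bits in (16, 8, 4):
    print(f"{bits}-bit: {model_size_gb(3e9, bits):.1f} GB")
# 16-bit: 6.0 GB
# 8-bit: 3.0 GB
# 4-bit: 1.5 GB
```

Going from 16-bit to 4-bit weights cuts the file to a quarter of its size, which is often the difference between "won't load" and "runs on your phone."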

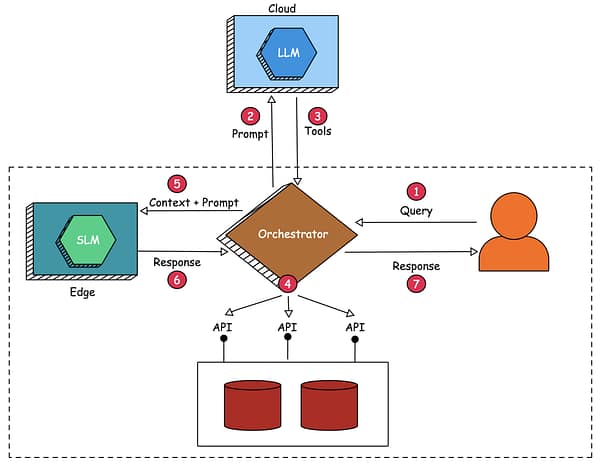

We’ll also see more smart handoffs. The device handles the quick stuff. If it gets too complex? Then it sends it to the cloud.

It’s like your phone calling in backup, but only when it needs to.
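Here's roughly what that handoff could look like in code. This is a sketch under big assumptions: "complexity" is guessed from word count alone, and `route`, `fake_local`, `fake_cloud`, and `max_local_words` are hypothetical names standing in for whatever your real local model and cloud API look like.

```python
from typing import Callable


def route(prompt: str,
          run_local: Callable[[str], str],
          run_cloud: Callable[[str], str],
          max_local_words: int = 40) -> str:
    """Answer short prompts on-device; hand longer ones to the cloud."""
    if len(prompt.split()) <= max_local_words:
        return run_local(prompt)
    return run_cloud(prompt)


# Stand-in backends (assumptions, not real model calls).
def fake_local(prompt: str) -> str:
    return f"[local] {prompt}"


def fake_cloud(prompt: str) -> str:
    return f"[cloud] {prompt[:20]}..."


print(route("Set a timer for ten minutes", fake_local, fake_cloud))
print(route("summarize " * 50, fake_local, fake_cloud))
```

Word count is a crude stand-in. A real router might look at the task type, the battery level, or how confident the local model is before calling for backup.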

Curious about the nuts and bolts? Check out this on-device LLM paper. It’s packed with insights on what’s working and what’s not.

Also, I dig into all this in the GenAI section right here on my site. Dive in if you’re into edge tech, tiny models, or just nerdy innovation.

Frequently Asked Questions

What exactly is a small LLM?

It’s a scaled-down language model that runs directly on your device. No cloud needed.

Why use LLMs at the edge?

You get faster results, better privacy, and less dependency on constant internet access.

Who’s using them right now?

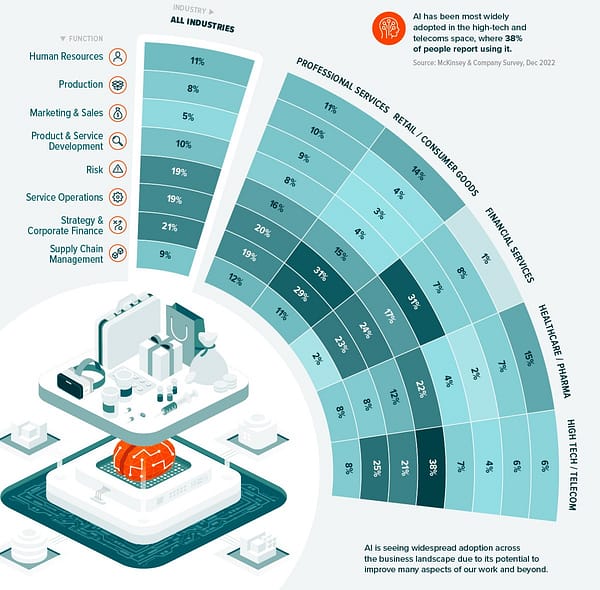

Everyone from fitness startups to car manufacturers to hospitals. It’s a wide field.

Are these models accurate?

For focused tasks, yes. They give up some breadth compared with the biggest cloud models, but they’re optimized to perform well in real-world situations.

Is cloud AI going away?

Nope. It’ll still be around. But edge AI is becoming the first stop, with the cloud as backup.

Final Thoughts

Small LLMs are flipping AI on its head. They’re local, fast, and way more private. Whether you’re building smart gear or running a business, they’re worth exploring.

Want more? Head to the GenAI category here for fresh takes and updates.