AI and Ethical Considerations: 9 Practical Principles for Responsible AI

AI and Ethical Considerations sounds like a big topic. It is. But you can make it simple if you treat AI like any other system that affects people. If it can shape someone’s opportunity, safety, money, or dignity, it needs rules. It needs checks. It needs ownership.

Right now, AI is used in hiring, lending, healthcare, education, customer support, marketing, and search. Many tools feel harmless because they are fast and helpful. Yet small errors can scale. A weak data choice can turn into a biased outcome for thousands of users.

This guide is a practical playbook. It is written for builders, marketers, founders, and editors who use AI in real workflows. No theory-first approach. Just what to watch, what to document, and how to reduce harm.

What ethical AI really means

Ethical AI is not a single feature. It is a set of choices across the AI lifecycle. It starts before training. It continues after launch.

In plain words, ethical AI means:

- The system is useful and safe for people.

- It does not unfairly harm groups.

- It respects privacy and consent.

- It can be explained when it matters.

- Humans remain accountable for outcomes.

Teams that ignore AI and Ethical Considerations tend to live through the same story. The model looked accurate in a demo. Then reality hit. Edge cases appeared. Users lost trust. Support tickets grew. Regulators asked questions.

Why AI ethics is now a business requirement

Ethics is not only about values. It is also about risk and trust. Even small teams should treat ethical AI as a core quality bar, like security or uptime.

Here is why:

- Scale: AI can affect many people fast.

- Opacity: Some models are hard to explain.

- Data risk: Training data can include sensitive details.

- Brand risk: One public failure can damage years of work.

- Legal risk: Rules around privacy and AI are expanding.

If you publish content or build digital products, this also connects to quality systems. For example, when you rely on AI for content, you still own the final output. Helpful, original, and accurate content matters more than volume.

Related reading on this site: Context-Aware AI for Business.

The 9 practical principles (use these as your checklist)

This is the heart of AI and Ethical Considerations. These nine principles are easy to audit and easy to assign.

1) Define the human impact before you build

Start with a simple question: Who can this system affect, and how?

Create a short “impact note” that answers:

- What decision does the AI influence?

- Who is the user and who is the subject?

- What happens if it is wrong?

- What groups might be impacted differently?

If the impact is high (health, money, education, legal, safety), raise the bar. Add review. Add monitoring. Add stronger fallback paths.
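One way to keep the impact note short and checkable is to store it as a small record. The sketch below is only an illustration: the `ImpactNote` class, its field names, and the `HIGH_IMPACT_DOMAINS` set are hypothetical names chosen for this example, and the list of controls simply mirrors the paragraph above.

```python
from dataclasses import dataclass, field

# Domains the text treats as high impact. Extend this set to fit your product.
HIGH_IMPACT_DOMAINS = {"health", "money", "education", "legal", "safety"}


@dataclass
class ImpactNote:
    decision: str             # what decision the AI influences
    user: str                 # who operates the system
    subject: str              # who the decision is about
    failure_consequence: str  # what happens if the system is wrong
    groups_at_risk: list = field(default_factory=list)
    domains: set = field(default_factory=set)

    def is_high_impact(self) -> bool:
        # High impact if the note touches any of the listed domains.
        return bool(self.domains & HIGH_IMPACT_DOMAINS)

    def required_controls(self) -> list:
        # Mirrors the text: add review, monitoring, and a fallback path.
        if self.is_high_impact():
            return ["human review", "monitoring", "fallback path"]
        return []


note = ImpactNote(
    decision="approve or decline a small loan",
    user="loan officer",
    subject="applicant",
    failure_consequence="a creditworthy applicant is declined",
    groups_at_risk=["applicants with thin credit files"],
    domains={"money"},
)
print(note.required_controls())  # ['human review', 'monitoring', 'fallback path']
```

A record like this can live next to the code, so the note gets reviewed whenever the system changes.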

2) Use data you can defend

Most ethical failures start in the dataset. Teams often do not know what is inside their data, where it came from, or what it represents.

Minimum standard:

- Document the source of each dataset.

- Document consent and permitted use.

- Track time range and geography.

- Check for missing groups and imbalance.

- Remove sensitive data when not needed.

In practice, “better data” usually beats “bigger data.” Documented data also makes later compliance work easier, because you can show where each dataset came from and what it may be used for.
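The minimum standard above can be sketched as a small record plus a representation check. Everything here is an assumption made for illustration: the `DatasetRecord` fields, the `underrepresented` helper, and the 10% threshold are not taken from any standard, so pick values that fit your domain.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class DatasetRecord:
    name: str
    source: str         # where the data came from
    consent_basis: str  # how consent was obtained
    permitted_use: str  # what the data may be used for
    time_range: str
    geography: str


def group_shares(labels):
    """Return each group's share of the rows as a fraction of the total."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}


def underrepresented(labels, expected_groups, min_share=0.10):
    """List expected groups that are missing or fall below min_share."""
    shares = group_shares(labels)
    return sorted(g for g in expected_groups if shares.get(g, 0.0) < min_share)


record = DatasetRecord(
    name="support_tickets_sample",
    source="internal helpdesk export",
    consent_basis="terms of service",
    permitted_use="quality analysis only",
    time_range="unspecified",
    geography="unspecified",
)

regions = ["urban"] * 92 + ["rural"] * 8
print(underrepresented(regions, ["urban", "rural", "remote"]))  # ['remote', 'rural']
```

Passing the expected groups explicitly matters: a group that is absent from the data would otherwise never show up in the counts.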

3) Measure bias, then decide what “fair” means

Bias is not only offensive language or overt discrimination. It can appear as different error rates across groups. It can appear as unequal access to benefits.

Fairness is also contextual. A hiring tool and a spam filter are not the same problem. You need to pick fairness goals that match the domain.

Practical bias checks:

- Compare false positives and false negatives by group.

- Review top features that drive outcomes.

- Test with counterfactual examples (change one attribute, keep others).

- Audit performance after launch, not only in the lab.

This is a core part of AI and Ethical Considerations because fairness problems often hide behind good average accuracy.
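The first check in the list, comparing false positives and false negatives by group, can be sketched in a few lines. This is a minimal example that assumes binary labels (0 or 1) and a hypothetical helper named `error_rates_by_group`. It reports the rates only. Deciding which gap is acceptable is still a domain decision.

```python
from collections import defaultdict


def error_rates_by_group(y_true, y_pred, groups):
    """Return {group: {"fpr": ..., "fnr": ...}} for binary labels (0 or 1)."""
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1 and pred == 1:
            counts[group]["tp"] += 1
        elif truth == 1 and pred == 0:
            counts[group]["fn"] += 1
        elif truth == 0 and pred == 1:
            counts[group]["fp"] += 1
        else:
            counts[group]["tn"] += 1

    rates = {}
    for group, c in counts.items():
        negatives = c["fp"] + c["tn"]  # rows whose true label is 0
        positives = c["fn"] + c["tp"]  # rows whose true label is 1
        rates[group] = {
            # None means the rate is undefined for this group.
            "fpr": c["fp"] / negatives if negatives else None,
            "fnr": c["fn"] / positives if positives else None,
        }
    return rates


y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 1, 0]
groups = ["a"] * 4 + ["b"] * 4

print(error_rates_by_group(y_true, y_pred, groups))
# {'a': {'fpr': 0.0, 'fnr': 0.0}, 'b': {'fpr': 0.5, 'fnr': 0.5}}
```

In this toy data the overall accuracy is 75%, yet every error lands on group "b". That is the pattern an average score hides.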

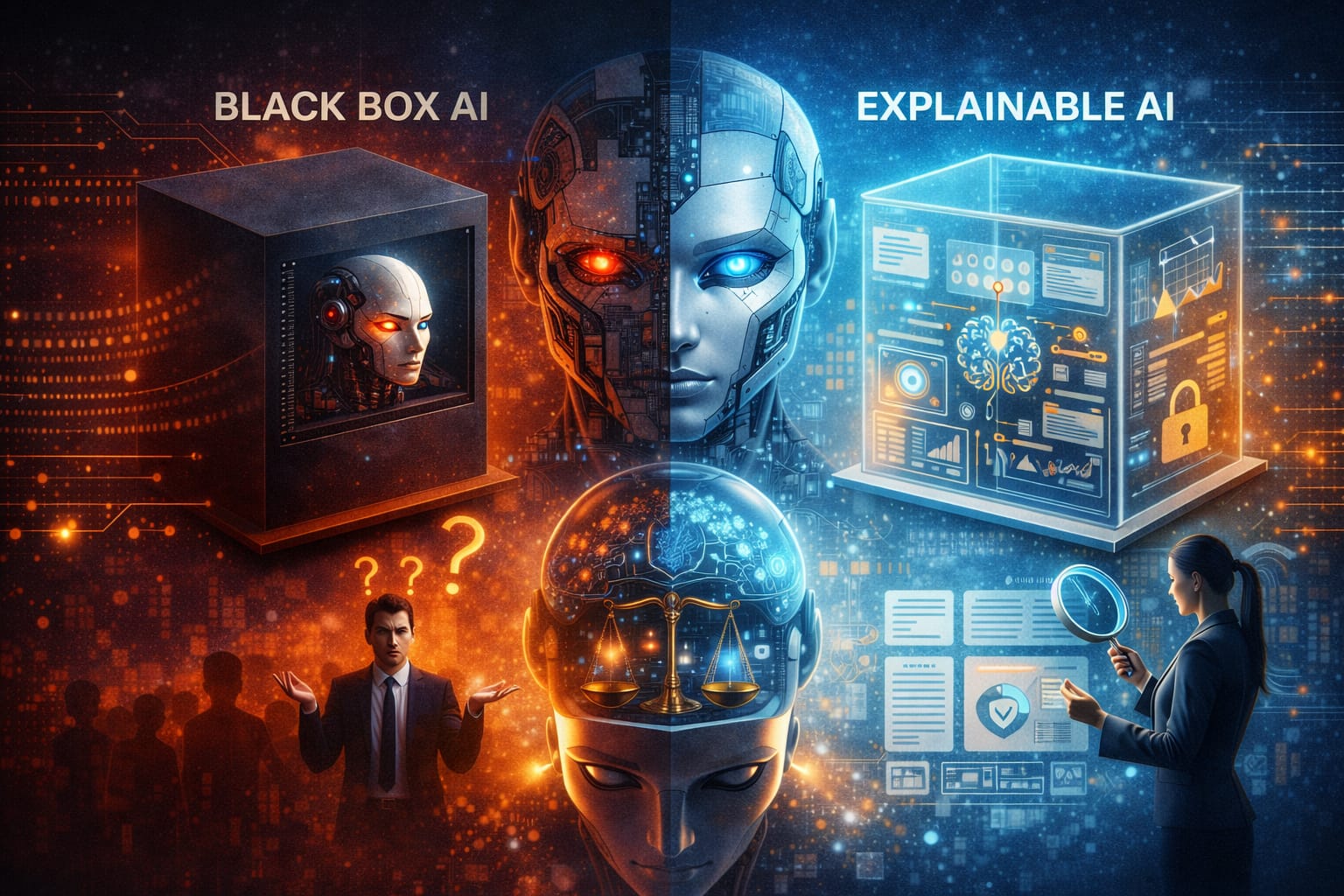

4) Prefer transparency over mystery

Users trust systems that behave predictably. Teams debug systems they can explain. In many products, you do not need a perfect explanation. You need a useful one.

Examples of good transparency:

- Show what the model considered (high-level features).

- Show confidence or uncertainty in plain language.

- Show the key reason for a decision when it matters.

- Provide an appeal or correction path.

If you cannot explain a high-stakes decision, you should not automate it fully. At minimum, keep a human in the loop.
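As a rough illustration of the transparency examples above, the sketch below turns a model score into plain-language confidence and attaches reasons and an appeal path. The function names, the thresholds (0.9, 0.7, 0.4), and the wording are all assumptions for this example. Calibrate them against your own model before showing them to users.

```python
def describe_confidence(probability):
    """Map a probability to a plain-language phrase (illustrative thresholds)."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    if probability >= 0.9:
        return "very likely"
    if probability >= 0.7:
        return "likely"
    if probability >= 0.4:
        return "uncertain"
    return "unlikely"


def explain_decision(outcome, probability, top_reasons):
    """Bundle the outcome with confidence, key reasons, and an appeal path."""
    confidence = describe_confidence(probability)
    return {
        "outcome": outcome,
        "confidence": confidence,
        "reasons": top_reasons[:3],  # show only the key reasons
        "needs_human_review": confidence == "uncertain",
        "appeal": "You can request a review of this decision.",
    }


result = explain_decision(
    outcome="flagged for review",
    probability=0.55,
    top_reasons=["unusual order size", "new account", "address mismatch", "late hour"],
)
print(result["confidence"])          # uncertain
print(result["needs_human_review"])  # True
```

Routing the "uncertain" band to a person is one simple way to keep a human in the loop where the model is least reliable.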

5) Protect privacy as a default

AI systems often pull data from many places. That creates risk. Even if you never “share data,” you can still leak it through logs, prompts, embeddings, or training artifacts.

Privacy basics that work in real teams:

- Collect the minimum data needed.

- Limit retention time for sensitive inputs.

- Mask personal data in logs.

- Separate training data from production user data unless consent is clear.

- Review third-party AI vendor data policies.

When you handle user prompts, treat them like support tickets or emails. They can contain secrets.
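Masking personal data in logs can start with something as small as the sketch below. It assumes two deliberately loose regular expressions for emails and phone numbers, plus a hypothetical `RedactingFilter` built on Python's standard `logging.Filter`. Patterns like these miss many formats and can also mask long order numbers, so treat this as a first layer, not a guarantee.

```python
import logging
import re

EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# Loose phone pattern: optional "+", then at least 8 digits,
# optionally separated by single spaces, dots, or dashes.
PHONE = re.compile(r"\+?\d(?:[\s.-]?\d){7,}")


def mask_personal_data(text):
    """Replace emails and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    return text


class RedactingFilter(logging.Filter):
    """Mask personal data in every record before a handler writes it."""

    def filter(self, record):
        record.msg = mask_personal_data(record.getMessage())
        record.args = ()  # the message is already fully formatted
        return True


logger = logging.getLogger("prompt_audit")
logger.addFilter(RedactingFilter())

print(mask_personal_data("Reach me at jane.doe@example.com or +1 415-555-0100"))
# Reach me at [EMAIL] or [PHONE]
```

Attaching the filter to the logger means individual call sites do not need to remember to redact.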

6) Keep humans accountable (no “the model did it”)

One of the simplest rules in AI and Ethical Considerations is this: Humans own the outcomes. Period.

Accountability becomes real when you assign roles:

- Model owner: accountable for performance and updates.

- Data owner: accountable for data quality and rights.

- Risk owner: accountable for approvals and escalation.

- Support owner: accountable for user feedback loops.

When accountability is vague, harm gets ignored. When it is clear, issues get fixed faster.

7) Build safety rails for misuse

AI can be used in ways you did not plan. Some misuse is accidental. Some is deliberate. This is why guardrails matter.

Examples of useful safety rails:

- Rate limits and abuse detection.

- Content filters for risky categories.

- Refusal patterns for harmful requests.

- Human review for sensitive outputs.

- Clear user policies and enforcement.

Guardrails should be tested like any other feature. Run red-team style tests. Track failure modes. Improve them over time.
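Rate limiting is the easiest rail on the list to sketch and to test. The class below is a minimal in-memory sliding-window limiter. The name `SlidingWindowRateLimiter` and the injectable `clock` parameter are choices made for this example, and a real deployment would usually need shared storage across processes.

```python
import time
from collections import defaultdict, deque


class SlidingWindowRateLimiter:
    """Allow at most max_requests per user within window_seconds."""

    def __init__(self, max_requests, window_seconds, clock=time.monotonic):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so tests can control time
        self._hits = defaultdict(deque)

    def allow(self, user_id):
        now = self.clock()
        hits = self._hits[user_id]
        # Drop timestamps that have aged out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False
        hits.append(now)
        return True


limiter = SlidingWindowRateLimiter(max_requests=3, window_seconds=60)
results = [limiter.allow("user-1") for _ in range(5)]
print(results)  # [True, True, True, False, False]
```

Passing the clock in as a parameter is what makes the guardrail testable like any other feature: a test can move time forward without sleeping.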

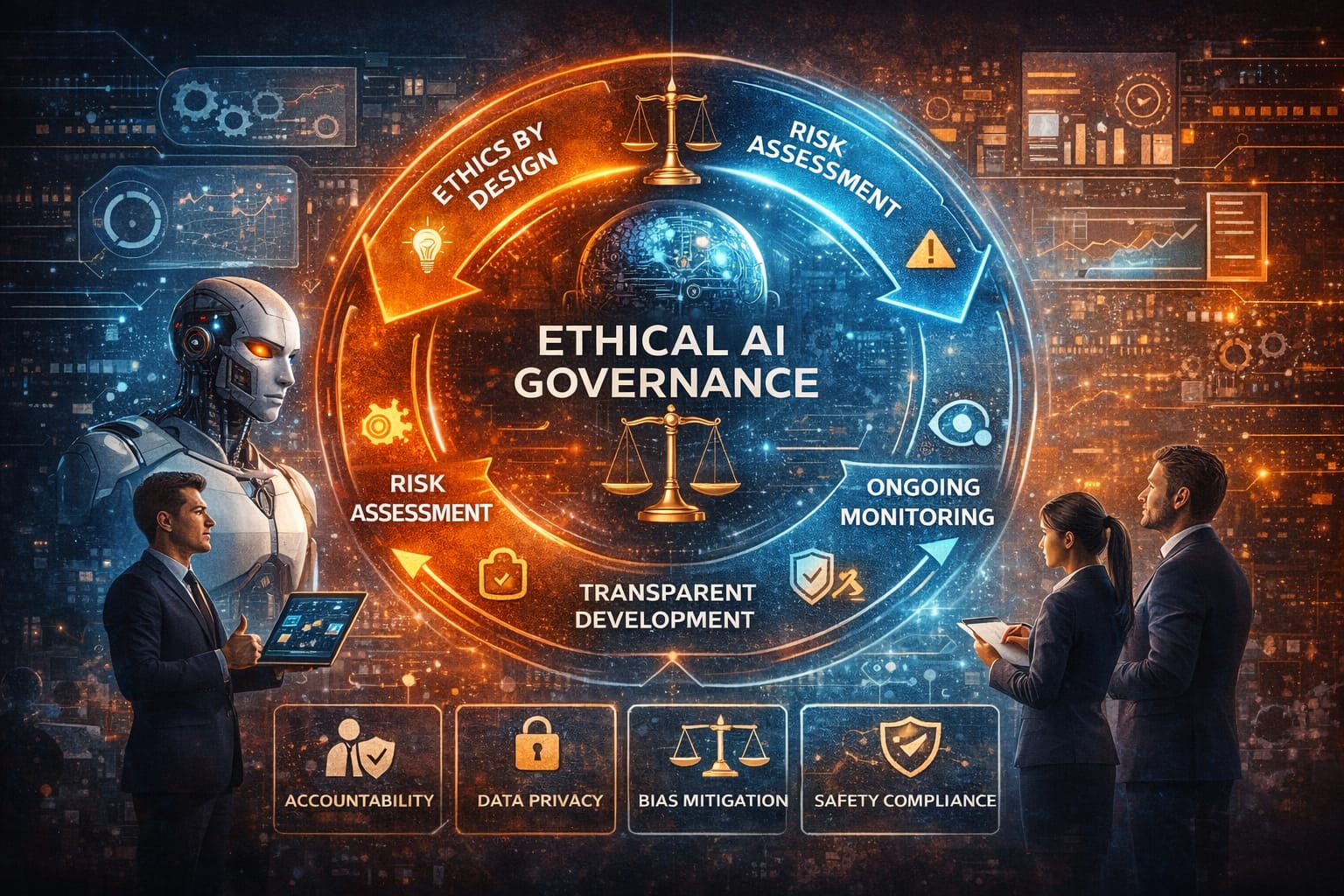

8) Monitor after launch (ethics is not a one-time task)

Many teams do one audit, then stop. That does not work. Data changes. User behavior changes. The world changes.

Set up monitoring that is simple and actionable:

- Track drift in input data.

- Track key outcome metrics weekly.

- Track complaints and appeals.

- Sample outputs for quality review.

- Log model version and major config changes.

Monitoring is where ethical intent becomes ethical reality.
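Tracking drift in input data can be approximated with the Population Stability Index (PSI), which compares the share of each category in a baseline sample against a current sample. The sketch below assumes categorical or pre-binned values and non-empty samples. The 0.25 alert level is a common rule of thumb, not a standard, so tune it for your data.

```python
import math
from collections import Counter


def population_stability_index(baseline, current, epsilon=1e-6):
    """PSI over categories: sum of (c - b) * ln(c / b) across categories."""
    base_counts, cur_counts = Counter(baseline), Counter(current)
    categories = set(base_counts) | set(cur_counts)
    psi = 0.0
    for category in categories:
        # epsilon avoids log(0) when a category is absent from one sample.
        b = max(base_counts[category] / len(baseline), epsilon)
        c = max(cur_counts[category] / len(current), epsilon)
        psi += (c - b) * math.log(c / b)
    return psi


last_month = ["mobile"] * 50 + ["desktop"] * 50
this_week = ["mobile"] * 80 + ["desktop"] * 20

psi = population_stability_index(last_month, this_week)
print(round(psi, 3))  # 0.416
if psi > 0.25:  # rule-of-thumb alert level, an assumption
    print("Input mix has shifted: review recent outputs")
```

Running a check like this on a weekly schedule, next to the outcome metrics and complaint counts, keeps the monitoring list simple and actionable.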

9) Use a governance framework that your team can follow

Governance does not need to be heavy. It needs to be consistent. Two respected references are the OECD AI Principles and the NIST AI Risk Management Framework.

Use these as anchors:

- OECD: human rights, fairness, transparency, accountability, robustness.

- NIST AI RMF: govern, map, measure, manage across the lifecycle.

External reference: NIST AI Risk Management Framework.

Related reading on this site: Retrieval-Augmented Generation (RAG) in production.

Ethical AI for content teams and publishers

If you publish content, AI and Ethical Considerations show up in a very practical way. AI can speed up drafts, but it can also produce errors, generic writing, and false claims. It can also repeat bias from training data.

Practical standards for publishers:

- Use AI to assist, not to replace expertise.

- Fact-check claims that affect decisions.

- Add first-hand context when you have it.

- Avoid medical or legal claims without sources.

- Do not publish made-up statistics or fake quotes.

For workflow ideas, see: Small AI Agents for Solopreneurs (2026).

A simple “ethical AI” workflow you can copy

Here is a lightweight process that works for small teams. It also scales.

- Plan: Write the impact note (who is affected and how).

- Data: Document sources, consent, and gaps.

- Build: Add safety rails and clear ownership.

- Test: Bias checks, abuse tests, and edge cases.

- Launch: Start with limited exposure if risk is high.

- Monitor: Drift, complaints, and regular output sampling.

- Improve: Patch issues and update documentation.

This workflow is not fancy. That is the point. It is doable. It is trackable. It makes AI and Ethical Considerations part of your normal work, not a side project.

Quick FAQ

Is ethical AI only for big companies?

No. Small teams often move faster and can build better habits early. Start with data documentation, basic bias checks, and a clear appeal path.

Do we need explainable AI for every product?

Not always. But if decisions affect money, access, health, safety, or rights, you need explainability or a human review layer.

What is the single best first step?

Create an impact note and assign an owner. That alone prevents many avoidable mistakes.

Conclusion

AI and Ethical Considerations are not about slowing progress. They are about keeping progress safe, trusted, and sustainable. If you define impact early, use defensible data, measure fairness, stay transparent, protect privacy, and monitor after launch, you will avoid most painful failures.

Ethical AI is not perfect AI. It is responsible AI.