Hybrid AI Architecture With Small Language Models

Hybrid AI architecture with small language models is a pattern many enterprise teams are moving to in 2026. It is not hype. It is a response to cost, speed, risk, and control.

In a hybrid setup, small language models (SLMs) do most of the work. They handle routine tasks that happen all day. Large language models (LLMs) step in only when you truly need deep reasoning or open-ended writing.

This approach is simple in idea but powerful in practice. It turns GenAI into a system you can run, measure, and govern.

If you are already investing in enterprise AI platforms, start here first: enterprise AI strategy. Then use this blueprint to design the stack.

Hybrid AI architecture with small language models is replacing LLM-only systems

LLM-only designs often break in production. They can work in demos and still fail under real-world load, cost, and compliance pressure.

Here are the common failure points.

- Cost spikes: Every prompt becomes expensive. The bill moves with usage.

- Slow responses: Even simple tasks take too long at scale.

- Weak control: Output can drift. Tone and policy can break.

- Hard audits: Proving what happened is tough without strong logs.

- Risk exposure: Sensitive inputs can leak into places they should not.

Hybrid AI architecture with small language models fixes this by adding structure. You route tasks. You add checks. You log decisions. You keep LLM calls for the cases that need them.

What a hybrid enterprise AI stack looks like

A good hybrid stack has clear layers. Each layer has one job.

- Input layer: Validate, clean, and classify the user request.

- SLM layer: Do routing, extraction, tagging, and short answers.

- Retrieval layer: Pull approved facts from enterprise sources.

- LLM layer: Write or reason only when needed.

- Control layer: Enforce policy, safety, and cost caps.

- Observability layer: Log prompts, decisions, and outcomes.

For more system thinking on this, you can also review AI architecture and align it with your AI program goals.
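To make the "each layer has one job" idea concrete, here is a minimal sketch of the six layers as plain functions run in order. Everything in it is an assumption for illustration: the function names, the keyword rule standing in for an SLM, the placeholder snippet, and the token caps are hypothetical, not a reference implementation.

```python
from typing import Any, Callable, Dict, List

Request = Dict[str, Any]
Layer = Callable[[Request], Request]

TRACE: List[Request] = []  # stand-in for a real log sink


def input_layer(req: Request) -> Request:
    # Validate and clean: trim whitespace, reject empty requests.
    text = str(req.get("text", "")).strip()
    if not text:
        raise ValueError("empty request")
    return {**req, "text": text}


def slm_layer(req: Request) -> Request:
    # A keyword rule stands in for an SLM classifier (assumption).
    needs_llm = any(w in req["text"].lower() for w in ("report", "plan"))
    return {**req, "needs_llm": needs_llm}


def retrieval_layer(req: Request) -> Request:
    # A real system would query approved enterprise sources here.
    return {**req, "context": ["<approved snippet>"]}


def llm_layer(req: Request) -> Request:
    # Escalate to the LLM only when the SLM layer flagged the request.
    return {**req, "answered_by": "llm" if req["needs_llm"] else "slm"}


def control_layer(req: Request) -> Request:
    # Enforce a cost cap; these token limits are made-up values.
    cap = 1024 if req["answered_by"] == "llm" else 128
    return {**req, "max_tokens": cap}


def observability_layer(req: Request) -> Request:
    # Record the whole decision path, not only the final answer.
    TRACE.append(dict(req))
    return req


PIPELINE: List[Layer] = [
    input_layer, slm_layer, retrieval_layer,
    llm_layer, control_layer, observability_layer,
]


def run_pipeline(req: Request) -> Request:
    for layer in PIPELINE:
        req = layer(req)
    return req
```

Because each layer only reads and extends the request, you can swap one layer (for example, a real SLM classifier) without touching the others.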

Why SLMs should handle most enterprise requests

Hybrid AI architecture with small language models works because most enterprise tasks are not creative writing tasks.

Most enterprise tasks are:

- Classify a ticket

- Extract fields from a form

- Detect intent

- Summarize a short note

- Route a request to the right workflow

- Check if content matches policy rules

These are narrow tasks. They repeat. They have clear labels. This is where SLMs shine.

Compared with LLMs, SLMs are typically faster and cheaper per request. They are easier to fine-tune for your domain. They are also easier to run in controlled environments.

If you want a deeper SLM foundation and model sizing logic, connect this post to your existing work on small language models.

Routing is the core skill in hybrid AI

Routing means you decide what model should handle the request. You do this before you generate a long answer.

Hybrid AI architecture with small language models uses routing to reduce cost and risk. It also improves quality. The user gets the right level of reasoning for the job.

A clean routing design usually follows these steps.

- Detect intent: What is the user trying to do?

- Check policy: Is the request allowed? What data can be used?

- Choose path: SLM-only, Retrieval + SLM, or Retrieval + LLM.

- Apply guardrails: Enforce style, tone, and compliance rules.

- Log and score: Store inputs, decisions, and output metrics.

This is how you stop LLMs from becoming the default hammer.
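The routing steps above can be sketched in a few lines. This is a simplified illustration, not a production router: keyword matching stands in for an SLM intent classifier, the intent names, blocked terms, and path labels are hypothetical, and the guardrail step for style and tone is left out to keep it short.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class RouteDecision:
    intent: str
    allowed: bool
    path: str  # "slm_only", "retrieval_slm", "retrieval_llm", or "blocked"


# Hypothetical intents and keywords; a real router would use a trained SLM.
INTENT_KEYWORDS: Dict[str, List[str]] = {
    "reset_password": ["password", "reset"],
    "policy_question": ["policy", "allowed"],
    "write_report": ["report", "plan"],
}
PATH_BY_INTENT: Dict[str, str] = {
    "reset_password": "slm_only",
    "policy_question": "retrieval_slm",
    "write_report": "retrieval_llm",
}
BLOCKED_TERMS: List[str] = ["ssn", "credit card"]  # made-up policy list
DECISION_LOG: List[RouteDecision] = []


def detect_intent(text: str) -> str:
    lowered = text.lower()
    for intent, keywords in INTENT_KEYWORDS.items():
        if any(k in lowered for k in keywords):
            return intent
    return "unknown"


def check_policy(text: str) -> bool:
    lowered = text.lower()
    return not any(term in lowered for term in BLOCKED_TERMS)


def route(text: str) -> RouteDecision:
    intent = detect_intent(text)          # 1. detect intent
    allowed = check_policy(text)          # 2. check policy
    if not allowed:
        path = "blocked"
    else:
        # 3. choose path; unknown intents escalate (an assumption)
        path = PATH_BY_INTENT.get(intent, "retrieval_llm")
    decision = RouteDecision(intent, allowed, path)
    DECISION_LOG.append(decision)         # 5. log the decision
    return decision
```

For example, `route("I forgot my password")` takes the SLM-only path, while a request that mentions a blocked term is stopped before any model generates text.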

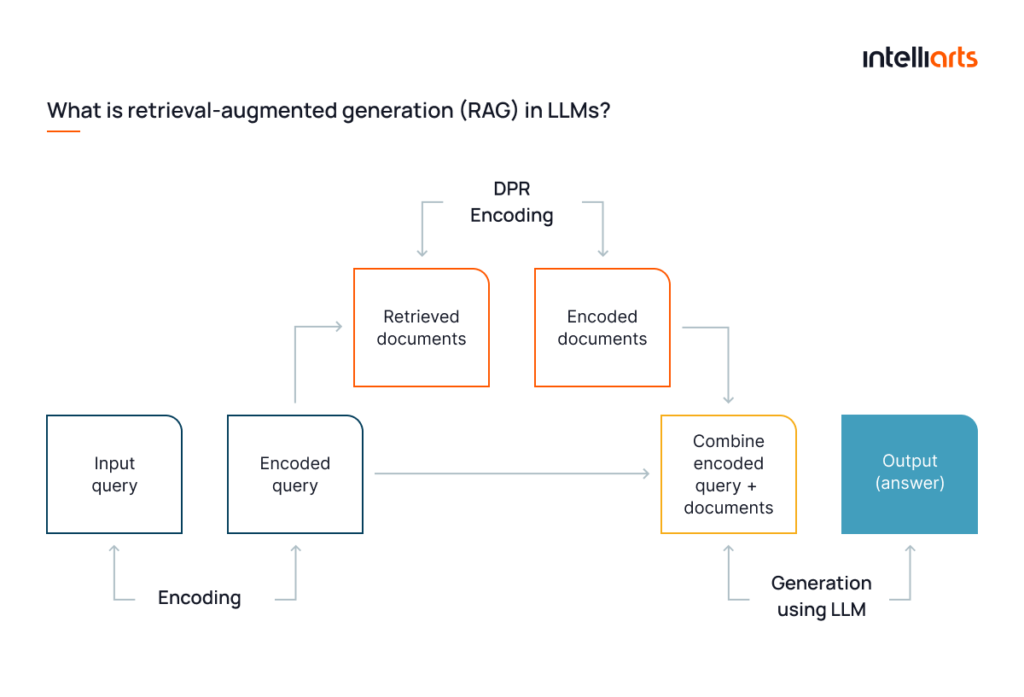

RAG is how hybrid systems stay factual

Enterprises cannot rely on model memory for policy, product, or customer details. Facts live in systems of record.

Retrieval-augmented generation (RAG) solves this by pulling approved context. Then the model answers using that context.

In hybrid AI architecture with small language models, RAG helps in two ways.

- SLM-first answers: The SLM can answer short, factual prompts using retrieved text.

- LLM escalation: If the task needs narrative or multi-step reasoning, the LLM uses the same retrieved facts.

This pattern reduces hallucinations. It also makes audits easier because you can store the retrieved sources used for the answer.
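Here is a rough sketch of that audit-friendly pattern: retrieve from an approved set, build the prompt from the retrieved text, and store the source IDs with the answer. The documents, IDs, and word-overlap scoring are invented for illustration, and `generate` is a placeholder for whichever SLM or LLM call you use. A real system would likely use a vector or search index instead.

```python
import re
from typing import Callable, Dict, List, Set

# Made-up approved documents; IDs and text are illustrative only.
APPROVED_DOCS: Dict[str, str] = {
    "hr-001": "Employees accrue 1.5 vacation days per month.",
    "it-014": "Password resets require manager approval.",
    "fin-007": "Expense reports are due within 30 days of travel.",
}


def tokenize(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, k: int = 2) -> List[str]:
    # Score each approved document by word overlap with the query.
    q = tokenize(query)
    scored = [(len(q & tokenize(body)), doc_id)
              for doc_id, body in APPROVED_DOCS.items()]
    hits = sorted((s for s in scored if s[0] > 0), reverse=True)
    return [doc_id for _, doc_id in hits[:k]]


def answer_with_sources(query: str,
                        generate: Callable[[str], str]) -> Dict[str, object]:
    source_ids = retrieve(query)
    context = "\n".join(f"[{d}] {APPROVED_DOCS[d]}" for d in source_ids)
    prompt = (f"Answer using only this context:\n{context}\n\n"
              f"Question: {query}")
    # Keep the source IDs next to the answer so an audit can replay it.
    return {"answer": generate(prompt), "source_ids": source_ids}
```

The same `answer_with_sources` function works for both paths: pass an SLM call for short factual prompts and an LLM call on escalation, and the retrieved facts stay identical.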

Guardrails and governance must be part of the architecture

Hybrid AI architecture with small language models is not only about models. It is about control.

Governance means:

- Who can ask what

- What data can be used

- How outputs are checked

- How decisions are logged

- How incidents are handled

For a strong reference point on trustworthy AI risk management, NIST provides the AI Risk Management Framework, which many enterprise teams use to shape policy and oversight.

Keep your guardrails practical. Do not try to boil the ocean. Start with clear policies and tight logs.
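One practical way to start with clear policies is a small, explicit table of who can ask what and which data each role may use. The sketch below assumes hypothetical role names, intents, and data source labels. It is not drawn from NIST or any specific product.

```python
from typing import Dict, Set, Tuple

# Hypothetical policy table: role -> permitted intents and data sources.
POLICY: Dict[str, Dict[str, Set[str]]] = {
    "helpdesk_agent": {
        "intents": {"reset_password", "ticket_triage"},
        "data": {"it_kb"},
    },
    "hr_partner": {
        "intents": {"policy_question"},
        "data": {"hr_handbook", "it_kb"},
    },
}


def check_access(role: str, intent: str, data_source: str) -> Tuple[bool, str]:
    # Return a decision plus a reason, so the reason can be logged.
    rule = POLICY.get(role)
    if rule is None:
        return False, "unknown role"
    if intent not in rule["intents"]:
        return False, "intent not permitted for role"
    if data_source not in rule["data"]:
        return False, "data source not permitted for role"
    return True, "ok"
```

Returning the reason alongside the decision keeps the policy check and the log entry in step, which helps when an incident needs review.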

Observability: log the decision path, not only the output

Most teams only log the final answer. That is not enough.

You should log:

- User request and metadata

- Routing decision and confidence score

- Retrieval queries and document IDs

- Model version and prompt template ID

- Safety checks and policy results

- Cost per request and latency

This is how hybrid AI architecture with small language models becomes manageable. You can debug issues. You can prove controls. You can improve the system with evidence.
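As a sketch, the fields listed above fit into one structured record per request, written as a JSON line. The field names and the `DecisionRecord` type are assumptions for illustration; adapt them to your own logging schema.

```python
import json
from dataclasses import asdict, dataclass
from typing import Dict, List


@dataclass
class DecisionRecord:
    request_id: str
    user_request: str
    route: str                    # routing decision
    route_confidence: float       # router confidence score
    retrieval_queries: List[str]
    document_ids: List[str]
    model_version: str
    prompt_template_id: str
    policy_checks: Dict[str, bool]
    cost_usd: float
    latency_ms: float


def to_log_line(record: DecisionRecord) -> str:
    # One JSON object per line is easy to ship to most log pipelines.
    return json.dumps(asdict(record), sort_keys=True)
```

With one record per request, a debugging session can start from the routing decision and follow it through retrieval, model version, and checks instead of guessing from the final answer.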

Cost control: set budgets at the routing layer

Cost control is easiest when you do it early.

Use these simple rules:

- SLM by default: Most requests never touch an LLM.

- Escalate only on need: Low confidence or complex tasks go to the LLM.

- Cap tokens: Limit output size based on the task class.

- Cache: Cache repeated answers for internal FAQs.

Hybrid AI architecture with small language models gives you levers you do not get in LLM-only systems.
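These four rules are small enough to sketch directly. The task classes, token caps, and the 0.7 confidence threshold below are made-up values, and `generate` stands in for a real model call.

```python
from typing import Callable, Dict

# Hypothetical output caps per task class (in tokens).
TOKEN_CAPS: Dict[str, int] = {
    "classify": 16,
    "extract": 128,
    "short_answer": 256,
    "long_form": 1500,
}
ESCALATION_THRESHOLD = 0.7  # assumed cutoff for SLM confidence

_faq_cache: Dict[str, str] = {}


def plan_call(task_class: str, slm_confidence: float) -> Dict[str, object]:
    # SLM by default; escalate only for long-form work or low confidence.
    escalate = (task_class == "long_form"
                or slm_confidence < ESCALATION_THRESHOLD)
    return {
        "model": "llm" if escalate else "slm",
        "max_tokens": TOKEN_CAPS.get(task_class, 128),
    }


def cached_answer(question: str, generate: Callable[[str], str]) -> str:
    # Normalize case and whitespace so repeated FAQs hit the cache.
    key = " ".join(question.lower().split())
    if key not in _faq_cache:
        _faq_cache[key] = generate(question)
    return _faq_cache[key]
```

Putting the cap and the escalation rule in one function means the budget decision is made once, at the routing layer, before any tokens are spent.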

7 patterns you can deploy now

Below are practical patterns that match how real enterprise teams build. Each pattern fits hybrid AI architecture with small language models.

1) SLM router + tool calls

The SLM classifies intent and calls tools. It can trigger search, ticket creation, or summaries. For these requests, LLM use stays near zero.

2) SLM policy gate before any generation

The SLM checks policy rules first. If the request breaks policy, it stops. This prevents risky prompts from reaching an LLM.

3) RAG + SLM answer for internal knowledge

Use retrieval to pull approved snippets. The SLM answers in short form. This is fast and low cost.

4) RAG + LLM for long-form synthesis

Use the same retrieval layer. Escalate to the LLM only for reports, plans, or multi-step reasoning.

5) Two-pass output checking

First pass: generate. Second pass: an SLM checks for policy, tone, and data leaks. This reduces bad outputs.
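A minimal sketch of the two-pass idea follows. To keep it runnable, two regular expressions stand in for the SLM checker and cover only the data-leak part of the check. The pattern names and the `generate` callable are assumptions, and regexes alone would miss many real leaks.

```python
import re
from typing import Callable, Dict, List

# Simplified patterns standing in for an SLM-based checker.
PII_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "ssn_like": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def check_output(draft: str) -> List[str]:
    # Second pass: return the name of every pattern found in the draft.
    return [name for name, pattern in PII_PATTERNS.items()
            if pattern.search(draft)]


def two_pass(prompt: str, generate: Callable[[str], str]) -> Dict[str, object]:
    draft = generate(prompt)            # first pass: generate
    violations = check_output(draft)    # second pass: check
    if violations:
        # Hold the draft back and report why.
        return {"released": False, "text": None, "violations": violations}
    return {"released": True, "text": draft, "violations": []}
```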

6) Human handoff on high-risk classes

When the request is risky or sensitive, the system drafts but requires approval before it is sent.

7) Continuous eval with shadow traffic

Run the new SLM or new prompt in the background. Compare results. Promote only when metrics improve.
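A shadow comparison can be sketched as below. It assumes you have labeled requests to score against, and it uses plain accuracy as the only promotion metric, which is a simplification. The function and key names are hypothetical.

```python
from typing import Callable, Dict, List


def shadow_compare(requests: List[str],
                   labels: List[str],
                   current: Callable[[str], str],
                   candidate: Callable[[str], str]) -> Dict[str, object]:
    if not requests or len(requests) != len(labels):
        raise ValueError("need equal, non-empty requests and labels")
    current_hits = 0
    candidate_hits = 0
    for text, label in zip(requests, labels):
        served = current(text)      # this result goes to the user
        shadow = candidate(text)    # this result is only recorded
        current_hits += served == label
        candidate_hits += shadow == label
    total = len(requests)
    current_acc = current_hits / total
    candidate_acc = candidate_hits / total
    return {
        "current_accuracy": current_acc,
        "candidate_accuracy": candidate_acc,
        # Promote only when the candidate is strictly better.
        "promote": candidate_acc > current_acc,
    }
```

In practice you would likely compare latency and cost as well, and require a minimum sample size before promoting.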

How to start in 30 days

If you want hybrid AI architecture with small language models to work, start with one workflow. Do not start with ten.

- Pick one process: For example, IT helpdesk triage.

- Define labels: Intent classes and outcomes.

- Deploy an SLM router: Route and log everything.

- Add retrieval: Use approved docs only.

- Add one escalation rule: Low-confidence requests go to the LLM.

- Add checks: Policy check and PII check.

- Measure: Accuracy, latency, and cost per request.

This path is low risk. It is measurable. It scales one workflow at a time.
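For the Measure step, the three numbers can be computed from per-request records. This sketch assumes each record carries hypothetical `predicted`, `expected`, `latency_ms`, `cost_usd`, and `model` fields. The LLM share is an extra figure added here for convenience.

```python
from statistics import mean
from typing import Dict, List


def summarize(records: List[Dict[str, object]]) -> Dict[str, float]:
    if not records:
        raise ValueError("no records to summarize")
    n = len(records)
    return {
        # Share of requests where the routed label matched the expected one.
        "accuracy": mean(
            1.0 if r["predicted"] == r["expected"] else 0.0 for r in records
        ),
        "mean_latency_ms": mean(float(r["latency_ms"]) for r in records),
        "cost_per_request_usd": sum(float(r["cost_usd"]) for r in records) / n,
        # How often the escalation rule fired.
        "llm_share": sum(1 for r in records if r["model"] == "llm") / n,
    }
```

Tracking these per workflow from day one gives you a baseline to compare against when you add the second workflow.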

Key takeaways

- Hybrid AI architecture with small language models is a production pattern, not a theory.

- SLMs should handle routing, extraction, and repeatable tasks.

- LLMs should be used only when they add clear value.

- RAG keeps answers tied to enterprise facts.

- Governance and logs must be part of the design.