Data Cleaning & Preprocessing: Top 5 Advanced Techniques for Quality Improvement in 2025

In Data Science, Data Cleaning and Data Preprocessing are crucial for ensuring data quality and accuracy. AI professionals and data enthusiasts face the perpetual challenge of handling imperfect data, and in 2025 the techniques used to clean and preprocess that data directly affect the reliability of decision-making and predictive analytics. This article explores the top strategies and emerging trends to help you improve your approach to data management.

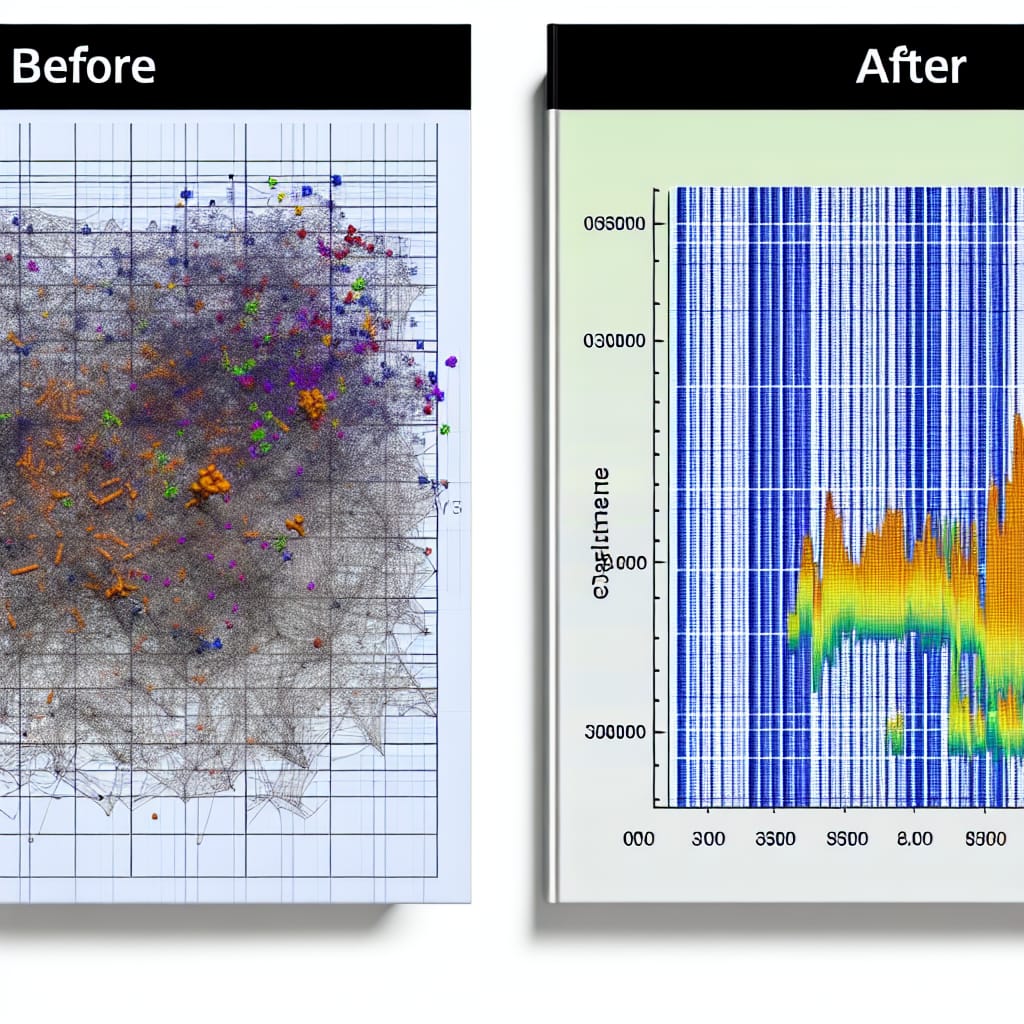

Understanding Data Cleaning and Preprocessing

Data cleaning and data preprocessing serve as foundational stages in the data science pipeline. Data cleaning involves identifying and rectifying errors, duplicates, and inconsistencies in datasets. Data preprocessing then prepares the data for analysis, which may involve normalization, transformation, and feature engineering. Both processes are designed to improve data quality and efficiency and to enable accurate model predictions.
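
As a rough illustration of the cleaning stage, the sketch below uses pandas to fix inconsistent text, drop duplicates, and fill a missing value. The column names and records are hypothetical, and the rules (title-casing, filling with "Unknown") are assumptions chosen for the example rather than general recommendations.

```python
import pandas as pd

# Hypothetical raw records with inconsistent casing, stray whitespace,
# duplicate rows, and one missing value.
raw = pd.DataFrame({
    "customer": ["Alice ", "alice", "Bob", "Bob", "Carol"],
    "city": ["Paris", "paris", "Berlin", "Berlin", None],
    "spend": [120.0, 120.0, 80.0, 80.0, 45.5],
})

def clean(df: pd.DataFrame) -> pd.DataFrame:
    out = df.copy()
    # Fix inconsistencies: trim whitespace and normalise casing in text columns.
    for col in ["customer", "city"]:
        out[col] = out[col].str.strip().str.title()
    # Remove rows that are exact duplicates after normalisation.
    out = out.drop_duplicates().reset_index(drop=True)
    # Handle the missing value explicitly instead of leaving it implicit.
    out["city"] = out["city"].fillna("Unknown")
    return out

cleaned = clean(raw)
print(cleaned)
```

Note that the duplicates only become detectable after the text is normalised, which is why the order of the steps matters.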

Advanced Techniques for Data Cleaning

As datasets grow larger and more complex, advanced techniques for data cleaning are essential. Here are the top methods in 2025:

Automated Cleaning Tools

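Tools like OpenRefine and Trifacta automate parts of the data cleaning process, reducing manual effort and the errors that come with it. OpenRefine groups likely variants of the same value using clustering methods such as key collision and nearest-neighbor matching, while Trifacta uses machine learning to suggest transformations. These tools can surface anomalies and propose corrections, which a person can then review and apply.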
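
To give a feel for how such tools can group likely duplicates, here is a minimal sketch of key collision clustering: each value is reduced to a normalised "fingerprint", and values that share a fingerprint are proposed as one group. It is loosely modelled on the fingerprint idea used by OpenRefine, not a reimplementation of it, and the function names and sample values are hypothetical.

```python
import re
import unicodedata
from collections import defaultdict

def fingerprint(value: str) -> str:
    """Reduce a string to a normalised key so near-identical spellings collide."""
    # Decompose accented characters and drop anything that is not ASCII.
    ascii_text = (
        unicodedata.normalize("NFKD", value)
        .encode("ascii", "ignore")
        .decode("ascii")
    )
    # Lowercase, replace punctuation with spaces, and split into tokens.
    tokens = re.sub(r"[^\w\s]", " ", ascii_text.lower()).split()
    # Sort and de-duplicate tokens so word order does not matter.
    return " ".join(sorted(set(tokens)))

def cluster_values(values):
    """Group values by fingerprint; keep only groups with differing spellings."""
    groups = defaultdict(list)
    for value in values:
        groups[fingerprint(value)].append(value)
    return [group for group in groups.values() if len(set(group)) > 1]

names = ["Acme Corp.", "acme corp", "Corp Acme", "Globex", "Café Noir", "cafe noir"]
clusters = cluster_values(names)
print(clusters)
```

The output is a list of candidate groups for a person to review. Merging them automatically would be a separate decision that depends on the domain.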

Use of Machine Learning for Anomaly Detection

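Machine learning methods such as Isolation Forests or DBSCAN (Density-Based Spatial Clustering of Applications with Noise) help identify anomalies in datasets. Isolation Forests flag points that can be separated from the rest with only a few random splits, while DBSCAN labels points that fall outside any dense cluster as noise. Both offer a more flexible approach to data validation than fixed thresholds.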
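
A minimal sketch of anomaly detection with scikit-learn's IsolationForest follows. The data is synthetic, and the contamination value (the assumed share of anomalies) is an illustrative guess that would need tuning on a real dataset.

```python
import numpy as np
from sklearn.ensemble import IsolationForest

rng = np.random.default_rng(0)
# Synthetic data: 200 ordinary points around the origin plus 3 obvious outliers.
normal = rng.normal(loc=0.0, scale=1.0, size=(200, 2))
outliers = np.array([[8.0, 8.0], [-9.0, 7.5], [10.0, -10.0]])
X = np.vstack([normal, outliers])

# contamination is an assumption about how much of the data is anomalous.
model = IsolationForest(n_estimators=100, contamination=0.02, random_state=0)
labels = model.fit_predict(X)  # 1 = inlier, -1 = flagged as anomaly

# Row indices of the points the model flagged for review.
flagged = np.where(labels == -1)[0]
print(flagged)
```

Flagged rows are candidates for inspection rather than confirmed errors, since an unusual value can still be a correct one.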

Text Data Cleaning with NLP

Natural Language Processing (NLP) techniques like tokenization (splitting text into words or subword units) and stemming (reducing words to a common root form) improve the quality of textual data, making it suitable for analysis. Modern NLP models also handle variations in language and syntax efficiently.
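
The sketch below shows tokenization and stemming using only the Python standard library. The stemmer is a deliberately naive suffix stripper written for this example; it is not the Porter algorithm, and a real project would more likely rely on an established NLP library.

```python
import re

# Toy suffix list for illustration only; not a real stemming algorithm.
SUFFIXES = ("ing", "ed", "es", "s")

def tokenize(text: str) -> list[str]:
    """Lowercase the text and extract alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())

def naive_stem(token: str) -> str:
    """Strip the first matching suffix, keeping at least three characters."""
    for suffix in SUFFIXES:
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token

text = "The models were cleaning and cleaned the noisy records!"
tokens = tokenize(text)
stems = [naive_stem(t) for t in tokens]
print(stems)
```

After stemming, "cleaning" and "cleaned" map to the same form, which is the point of the technique: different surface forms are counted as one term.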

Best Practices in Data Preprocessing

Feature Engineering

Effective feature engineering involves creating meaningful variables that improve model performance. Techniques such as polynomial features, interaction terms, or domain-specific transformations unlock hidden patterns in the data.
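
As a small example of polynomial features and interaction terms, the sketch below expands a feature matrix with squared and pairwise product columns using NumPy. The function name and the price and quantity columns are hypothetical. scikit-learn's PolynomialFeatures offers a more general version of the same idea.

```python
import numpy as np

def degree2_features(X: np.ndarray) -> np.ndarray:
    """Append squared terms and pairwise interaction terms to X."""
    n_features = X.shape[1]
    columns = [X]
    for i in range(n_features):
        for j in range(i, n_features):
            # i == j gives a squared term; i < j gives an interaction term.
            columns.append((X[:, i] * X[:, j]).reshape(-1, 1))
    return np.hstack(columns)

# Hypothetical features per row: [price, quantity]
X = np.array([[2.0, 3.0], [4.0, 5.0]])
X_poly = degree2_features(X)
# Column order: price, quantity, price^2, price*quantity, quantity^2
print(X_poly)
```

The number of generated columns grows quadratically with the number of input features, so this kind of expansion is usually applied to a selected subset.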

Scaling and Normalization

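Scaling methods like Min-Max Scaling, which maps each feature to a fixed range such as [0, 1], and Z-Score Standardization, which rescales each feature to zero mean and unit variance, put features on comparable scales. This matters most for models sensitive to input scale, such as distance-based methods and models trained with gradient descent.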
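
Both scaling methods are short enough to write out directly. The following NumPy sketch is illustrative; the function names and sample values are assumptions, and the guard for constant columns is one possible choice among several.

```python
import numpy as np

def min_max_scale(X):
    """Rescale each column to the [0, 1] range."""
    X = np.asarray(X, dtype=float)
    col_min, col_max = X.min(axis=0), X.max(axis=0)
    # Use a span of 1.0 for constant columns to avoid dividing by zero.
    span = np.where(col_max > col_min, col_max - col_min, 1.0)
    return (X - col_min) / span

def z_score(X):
    """Rescale each column to zero mean and unit (population) variance."""
    X = np.asarray(X, dtype=float)
    mean, std = X.mean(axis=0), X.std(axis=0)
    std = np.where(std > 0, std, 1.0)
    return (X - mean) / std

# Two features on very different scales.
X = np.array([[1.0, 100.0], [2.0, 300.0], [3.0, 500.0]])
X_minmax = min_max_scale(X)
X_std = z_score(X)
print(X_minmax)
print(X_std)
```

In a modeling workflow, the minimum, maximum, mean, and standard deviation should be computed on the training data only and then reused on validation and test data, so that no information leaks from the held-out sets.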

Dimensionality Reduction

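Principal Component Analysis (PCA) and t-distributed Stochastic Neighbor Embedding (t-SNE) reduce the feature space. PCA projects the data onto the directions of greatest variance, which can help filter out noise and keep the most informative structure of a dataset. t-SNE is used mainly to visualize high-dimensional data in two or three dimensions.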
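
To make the idea behind PCA concrete, the sketch below implements it with NumPy's singular value decomposition on synthetic data in which one feature is almost a copy of another. The function name and the data are assumptions for illustration. In practice a library implementation such as scikit-learn's PCA would be the usual choice.

```python
import numpy as np

def pca(X, n_components):
    """Project X onto its top principal components via SVD."""
    X = np.asarray(X, dtype=float)
    X_centered = X - X.mean(axis=0)
    # Rows of Vt are the principal directions, ordered by singular value.
    _, S, Vt = np.linalg.svd(X_centered, full_matrices=False)
    components = Vt[:n_components]
    explained_variance = (S ** 2) / (X.shape[0] - 1)
    ratio = explained_variance[:n_components] / explained_variance.sum()
    return X_centered @ components.T, ratio

rng = np.random.default_rng(42)
# Synthetic data: the third feature is nearly a copy of the first.
base = rng.normal(size=(100, 2))
noisy_copy = base[:, 0] + 0.01 * rng.normal(size=100)
X = np.column_stack([base[:, 0], base[:, 1], noisy_copy])

X_reduced, ratio = pca(X, n_components=2)
print(X_reduced.shape, ratio)
```

Because the third feature is redundant, two components retain almost all of the variance, which is the situation where dimensionality reduction loses the least information.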

Real-World Examples

In the healthcare industry, data cleaning and preprocessing play a central role in patient data analysis. For instance, applying machine learning algorithms to clean electronic health records (EHRs), such as flagging implausible values or reconciling duplicate patient entries, can support more accurate diagnoses and better patient outcomes.

Retail businesses use advanced data preprocessing to refine customer segmentation. By employing feature engineering, retailers extract valuable insights from purchasing patterns, thus tailoring marketing strategies more effectively.

Future Trends

Moving forward, we expect to see more integration of AI-driven data cleaning solutions, with intelligent systems autonomously correcting data without human intervention. The rise of explainable AI (XAI) will offer transparent processes, aiding in better data preprocessing decisions.

FAQs

What is the difference between data cleaning and data preprocessing?

Data cleaning focuses on fixing errors and correcting data, while data preprocessing involves preparing the cleaned data for further analysis, including transformations and feature extraction.

Why is data preprocessing important in AI?

Data preprocessing ensures that AI models receive high-quality input data, enhancing model accuracy and performance. It plays a vital role in achieving reliable, actionable insights.

Can automated tools replace manual data cleaning?

While automated tools can significantly reduce the manual effort, they are best used to complement human expertise, especially when complex domain knowledge or contextual understanding is required.

What emerging trends should data scientists watch in data cleaning?

Data scientists should keep an eye on machine learning-based data cleaning techniques, edge computing’s role in real-time data processing, and the growing significance of ethical data handling and privacy concerns.

Conclusion

Data Cleaning and Data Preprocessing are indispensable for refining raw data into a powerful tool for analysis and decision-making. As data complexity grows, adopting advanced techniques helps maintain data quality and reliability. Looking ahead, the integration of AI in these processes promises further gains in efficiency and effectiveness.