10 Essential Secrets of Explainable AI for Ultimate AI Transparency

Introduction

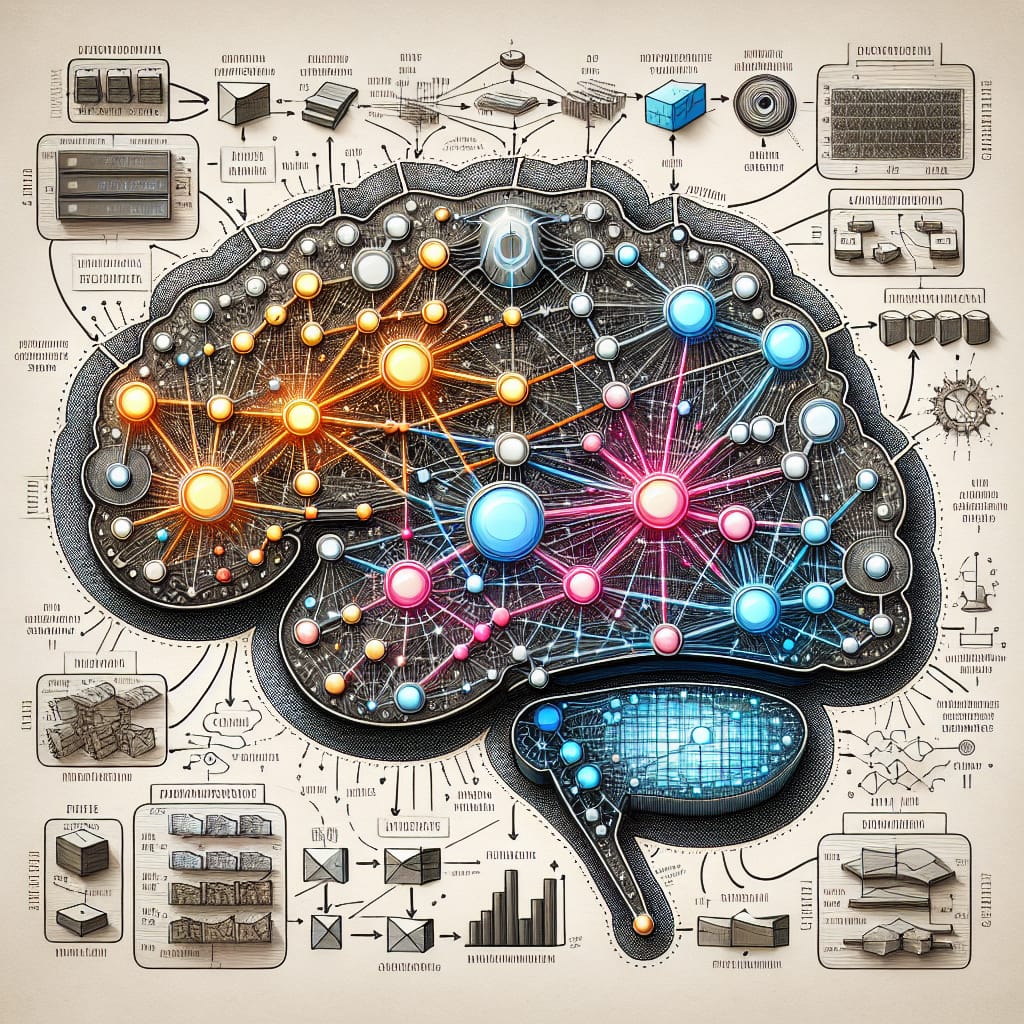

In the rapidly evolving world of artificial intelligence, understanding how AI systems reach their decisions is crucial. Explainable AI (XAI) is a set of methods that aim to make AI models more transparent and understandable. In this article, we’ll delve into the secrets of explainable AI and explore how it contributes to AI transparency.

Table of Contents

- Understanding Explainable AI

- Benefits of Explainable AI

- Real-world Examples of XAI

- Implementing Explainable AI

- Conclusion

- FAQs

Understanding Explainable AI

Explainable AI (XAI) refers to methods and techniques that make AI models more interpretable and comprehensible to humans. The goal is AI that can give clear, understandable justifications for its decisions. When AI systems are transparent, users can trust them and use these powerful tools effectively.

Benefits of Explainable AI

1. Building Trust

Explainable AI plays a critical role in establishing trust between AI systems and users. By showing how AI decisions are made, it helps users judge when they can rely on AI-driven outcomes.

2. Enhancing User Engagement

With AI transparency, users can engage more effectively with AI systems because they understand how those systems work. This can lead to better decision-making and greater user satisfaction.

3. Reducing Errors

When AI models offer clear explanations, errors are easier to detect and correct. In this way, XAI helps maintain the accuracy and reliability of AI systems.

4. Complying with Regulations

Data protection regulations, such as the EU’s General Data Protection Regulation (GDPR), increasingly demand transparency in automated decision-making. Explainable AI helps organizations meet these legal requirements, protecting both users and organizations.

Real-world Examples of XAI

Several industries are leveraging XAI to enhance AI transparency:

Healthcare

- AI systems in healthcare can explain the reasoning behind diagnoses and treatment recommendations, helping doctors evaluate them and provide better patient care.

Finance

- Financial institutions use explainable AI to show why transactions are flagged as potentially fraudulent and to provide insights into risk management decisions.
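In the finance setting, one common pattern is "reason codes": the factors that contributed most to a risk score are reported alongside the decision. The minimal Python sketch below shows the idea for a hypothetical linear risk score. The feature names, weights, and averages are invented for illustration only; real scoring models and their reporting requirements will differ.

```python
# Hypothetical linear risk model: weights and population averages are illustrative only.
WEIGHTS = {"late_payments": 0.8, "credit_utilization": 1.5, "account_age_years": -0.1}
AVERAGES = {"late_payments": 1.0, "credit_utilization": 0.3, "account_age_years": 8.0}


def risk_reasons(applicant: dict[str, float], top_k: int = 2) -> list[tuple[str, float]]:
    """Return the features that raise this applicant's risk score the most."""
    # Contribution = weight * (applicant's value - average value).
    contributions = {
        name: weight * (applicant[name] - AVERAGES[name]) for name, weight in WEIGHTS.items()
    }
    # Keep only features that push the risk score up, largest first.
    raising = sorted(
        ((name, c) for name, c in contributions.items() if c > 0),
        key=lambda item: item[1],
        reverse=True,
    )
    return raising[:top_k]


reasons = risk_reasons({"late_payments": 4, "credit_utilization": 0.9, "account_age_years": 2})
print([name for name, _ in reasons])  # → ['late_payments', 'credit_utilization']
```

For a linear score like this one, weight times (value minus average) is exactly each feature's Shapley value when the averages are used as the baseline, so this simple scheme lines up with Shapley-based attributions for linear models.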

Autonomous Vehicles

- XAI helps engineers understand and improve the decision-making processes of self-driving cars, supporting passenger safety.

Implementing Explainable AI

Integrating XAI involves several strategies:

Model Selection

Choose models that are inherently more interpretable, such as decision trees or rule-based models, where possible.
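For a decision tree, the explanation is simply the path an input takes from the root to a leaf. The minimal Python sketch below hand-builds a tiny, hypothetical loan-approval tree and returns the rules it followed alongside the prediction. The `Node` class, feature names, and thresholds are all invented for illustration and do not come from any library.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """One node of a hand-built decision tree (hypothetical example, not a library class)."""
    feature: Optional[str] = None   # feature tested at an internal node; None at a leaf
    threshold: float = 0.0          # go left when value <= threshold, else right
    left: Optional["Node"] = None
    right: Optional["Node"] = None
    label: Optional[str] = None     # prediction stored at a leaf


def predict_with_path(node: Node, x: dict[str, float]) -> tuple[str, list[str]]:
    """Walk from the root to a leaf, recording each rule that was applied."""
    path = []
    while node.label is None:
        value = x[node.feature]
        if value <= node.threshold:
            path.append(f"{node.feature} = {value} <= {node.threshold}")
            node = node.left
        else:
            path.append(f"{node.feature} = {value} > {node.threshold}")
            node = node.right
    return node.label, path


# Hypothetical tree: decline high debt ratios, then decline low incomes.
tree = Node(
    feature="debt_ratio", threshold=0.4,
    left=Node(feature="income", threshold=30000,
              left=Node(label="decline"), right=Node(label="approve")),
    right=Node(label="decline"),
)

label, rules = predict_with_path(tree, {"debt_ratio": 0.25, "income": 45000})
print(label)  # → approve
for rule in rules:
    print(" ", rule)
```

Because the model and its explanation are the same structure, there is no gap between what the model does and what it reports.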

Post-hoc Explanation

Use techniques such as LIME (Local Interpretable Model-agnostic Explanations) or SHAP (SHapley Additive exPlanations) to explain a model’s behavior after it has been trained.
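SHAP is based on Shapley values from cooperative game theory. The minimal Python sketch below computes exact Shapley values for a single prediction by brute force, treating a feature as "absent" by replacing it with a baseline value. This is one simple variant of the idea, not the `shap` library’s API; the toy model and baseline are assumptions for illustration. Because it enumerates every coalition of features, it is only practical for a handful of features, which is why libraries such as SHAP rely on approximations or model-specific algorithms.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Sequence


def shapley_values(model: Callable[[Sequence[float]], float],
                   x: Sequence[float],
                   baseline: Sequence[float]) -> list[float]:
    """Exact Shapley values for one prediction, using a single baseline for absent features."""
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            for coalition in combinations(others, size):
                # Shapley weight: |S|! * (n - |S| - 1)! / n!
                weight = factorial(size) * factorial(n - size - 1) / factorial(n)
                # Features in the coalition (plus i, or not) take their real values;
                # all others are set to the baseline.
                with_i = [x[j] if (j in coalition or j == i) else baseline[j] for j in range(n)]
                without_i = [x[j] if j in coalition else baseline[j] for j in range(n)]
                phi[i] += weight * (model(with_i) - model(without_i))
    return phi


def toy_model(v: Sequence[float]) -> float:
    # Hypothetical model with an interaction between features 0 and 2.
    return 2 * v[0] + 3 * v[1] + v[0] * v[2]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, x, baseline)
print([round(p, 6) for p in phi])  # → [3.5, 6.0, 1.5]
print(round(sum(phi), 6))          # → 11.0, i.e. toy_model(x) - toy_model(baseline)
```

The interaction term `v[0] * v[2]` is split evenly between features 0 and 2, and the contributions add up to the difference between the prediction and the baseline output, which is the additive property the name SHAP refers to.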

User Interface Design

Create dashboards and visual aids that simplify complex AI processes for end-users, making AI outputs more comprehensible.
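As a minimal sketch of such a visual aid, the Python function below renders signed feature contributions (for example, the output of an attribution method like SHAP) as a plain-text bar chart, with the most influential features first. The function name and input values are hypothetical; a production dashboard would more likely use a charting or UI library.

```python
def render_contributions(contributions: dict[str, float], width: int = 20) -> str:
    """Render signed feature contributions as a plain-text bar chart."""
    if not contributions:
        return ""
    # Scale bars relative to the largest absolute contribution (avoid dividing by zero).
    largest = max(abs(v) for v in contributions.values()) or 1.0
    name_width = max(len(name) for name in contributions)
    lines = []
    # Sort so the most influential features appear first.
    for name, value in sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True):
        bar_length = round(abs(value) / largest * width)
        bar = ("+" if value >= 0 else "-") * bar_length
        lines.append(f"{name:<{name_width}} {value:+.2f} {bar}")
    return "\n".join(lines)


print(render_contributions({"income": 6.0, "debt_ratio": -3.0, "age": 0.5}, width=10))
```

Using "+" and "-" bars keeps the direction of each feature’s effect visible at a glance, even without color.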

Conclusion

Explainable AI is essential in today’s AI-driven world. By enhancing AI transparency, XAI fosters trust, ensures compliance, and promotes user engagement. As AI continues to advance, adopting XAI will be crucial for organizations to harness AI’s full potential. Explore our other articles to learn more about innovative AI solutions that can benefit your business.