Essential Insights into Retrieval-Augmented Generation (RAG) in 2025

RAG is usually the fastest way to make LLM outputs more accurate on your own data without retraining the model. In 2025, it’s no longer an “advanced” pattern. It’s a default architecture for enterprise chatbots, internal copilots, and document Q&A.

Retrieval-Augmented Generation (RAG) matters because it keeps answers grounded, auditable, and current without rebuilding the model every time your data changes.

Quick Definition

Retrieval-Augmented Generation (RAG) is a method where an LLM first retrieves relevant information from your knowledge sources (like documents or databases) and then generates an answer using that retrieved context, often with citations.

- Retriever: finds relevant chunks (usually via embeddings search)

- Generator: the LLM writes the response

- Grounding: citations/links reduce hallucinations and increase trust

Quick RAG checklist: strong chunking, hybrid retrieval, reranking, and evaluation.

TL;DR

- Use RAG when your answers must be up-to-date and traceable to your data.

- Most RAG failures come from bad chunking, weak retrieval, or no evaluation.

- In 2025, “good RAG” usually means hybrid retrieval, reranking, and guardrails.

Why RAG matters in 2025

LLMs are strong at language, but they don’t magically know your internal documents, your latest policies, or your private datasets. You can fine-tune, but fine-tuning is slow, expensive, and hard to keep updated. RAG solves this by letting the model “look up” information at answer time.

If you care about accuracy, citations, and operational control, RAG is usually the best first move before touching fine-tuning.

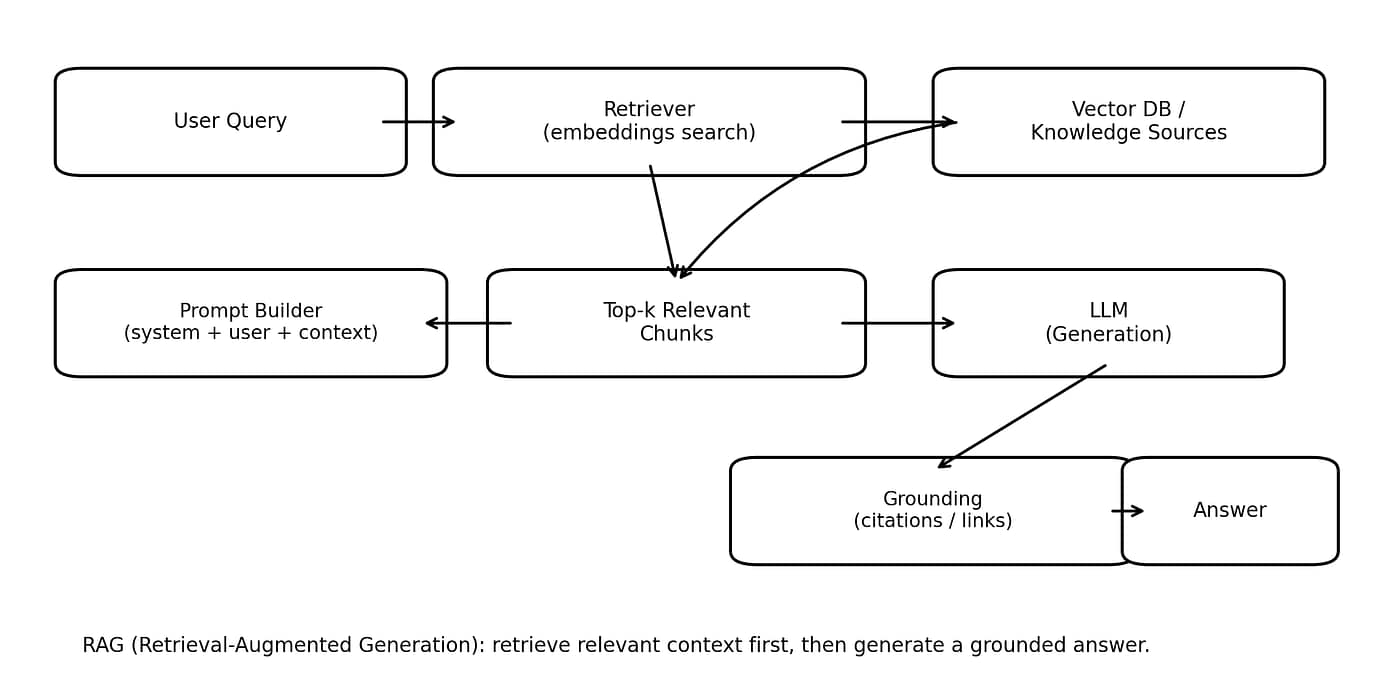

RAG architecture (with diagram)

What’s happening in the diagram

- User Query: question from user

- Retriever: converts query to embedding, searches vector DB

- Vector DB / Knowledge Sources: stores chunks + metadata

- Top-k Chunks: best matching passages returned

- Prompt Builder: assembles system instructions + query + retrieved context

- LLM: generates answer using the context

- Grounding: citations/links to sources used

Data preparation: chunking, metadata, dedup

Most “RAG is bad” stories are really “your indexing pipeline is bad.” In 2025, indexing quality often matters more than model choice.

Chunking that actually works

- Chunk by meaning (headings/sections), not by fixed character counts alone.

- Add overlap so the retriever doesn’t miss key context at boundaries.

- Keep chunks small enough to be precise, but large enough to be useful.
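The three chunking rules above can be sketched in a few lines of Python. This is a minimal illustration, not a production splitter: it assumes Markdown-style `#` headings mark section boundaries, measures size in characters rather than tokens, and the names `split_sections`, `window`, and `chunk_document` are hypothetical.

```python
import re
from typing import Dict, List

# Assumption: sections are introduced by Markdown-style headings ("# Title").
HEADING = re.compile(r"^#{1,6}\s+(.*)$")


def split_sections(text: str) -> List[Dict[str, str]]:
    """Split a document into sections at heading lines."""
    sections: List[Dict[str, str]] = []
    title, lines = "", []
    for line in text.splitlines():
        match = HEADING.match(line)
        if match:
            if any(l.strip() for l in lines):
                sections.append({"heading": title, "text": "\n".join(lines).strip()})
            title, lines = match.group(1).strip(), []
        else:
            lines.append(line)
    if any(l.strip() for l in lines):
        sections.append({"heading": title, "text": "\n".join(lines).strip()})
    return sections


def window(text: str, max_chars: int, overlap: int) -> List[str]:
    """Cut long text into pieces of at most max_chars that share `overlap` characters."""
    if overlap >= max_chars:
        raise ValueError("overlap must be smaller than max_chars")
    if len(text) <= max_chars:
        return [text]
    step = max_chars - overlap
    pieces, start = [], 0
    while start < len(text):
        pieces.append(text[start:start + max_chars])
        if start + max_chars >= len(text):
            break
        start += step
    return pieces


def chunk_document(text: str, max_chars: int = 800, overlap: int = 100) -> List[Dict[str, str]]:
    """Chunk by section first, then window any section that is too long."""
    chunks = []
    for section in split_sections(text):
        for piece in window(section["text"], max_chars, overlap):
            chunks.append({"heading": section["heading"], "text": piece})
    return chunks
```

Keeping the heading on every chunk means a retrieved passage still carries its section title, even when it comes from the middle of a long section.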

Metadata is a multiplier

- Source URL / doc ID

- Title + section heading

- Date/version

- Permissions / team visibility

Dedup and normalize

Duplicate PDFs, repeated pages, and near-identical policy versions create noisy retrieval. Dedup before indexing and store versions explicitly.
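As a rough sketch, a chunk record can carry the metadata fields listed above, and exact duplicates can be dropped by hashing normalized text. The `ChunkRecord` fields and helper names are illustrative assumptions. This approach only catches copies that are identical after whitespace and case normalization, so near-identical policy versions would need a fuzzier similarity check.

```python
import hashlib
import re
from dataclasses import dataclass
from typing import FrozenSet, Iterable, List


@dataclass(frozen=True)
class ChunkRecord:
    # Hypothetical schema mirroring the metadata list above.
    doc_id: str
    title: str
    section: str
    version: str
    allowed_teams: FrozenSet[str]
    text: str


def normalize(text: str) -> str:
    """Collapse whitespace and lowercase so trivial differences do not hide duplicates."""
    return re.sub(r"\s+", " ", text).strip().lower()


def content_key(text: str) -> str:
    """Stable fingerprint of the normalized text."""
    return hashlib.sha256(normalize(text).encode("utf-8")).hexdigest()


def dedup(records: Iterable[ChunkRecord]) -> List[ChunkRecord]:
    """Keep the first record for each distinct normalized text."""
    seen = set()
    unique = []
    for record in records:
        key = content_key(record.text)
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique
```

Because the version is stored on the record, you can decide which copy survives (for example, by sorting newest-first before calling `dedup`).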


Retrieval: embeddings, hybrid, reranking

Embeddings retrieval (baseline)

This is the default: embed the query, run similarity search, return top-k chunks. It’s fast and works well for many internal knowledge bases.
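The baseline is small enough to sketch without a vector database. The example below assumes embeddings already exist as plain lists of floats (how they are produced is out of scope here) and uses cosine similarity, one common choice of similarity measure. The names `cosine` and `top_k` are illustrative.

```python
import math
from typing import List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(
    query_vec: Sequence[float],
    index: List[Tuple[str, Sequence[float]]],
    k: int = 3,
) -> List[Tuple[str, float]]:
    """Score every (chunk_id, vector) pair and return the k most similar."""
    scored = [(chunk_id, cosine(query_vec, vec)) for chunk_id, vec in index]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]
```

A brute-force scan like this is fine for a few thousand chunks. Larger indexes typically move to approximate nearest-neighbor search.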

Hybrid retrieval (often better)

Hybrid retrieval combines keyword search (BM25) + embeddings. This helps with exact terms like error codes, product names, invoice IDs, and legal clauses.
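One common way to merge a keyword ranking with an embeddings ranking is reciprocal rank fusion (RRF), which only needs each list's order, not comparable scores. The sketch below assumes the two ranked lists of chunk IDs were produced elsewhere. The constant `k=60` is a conventional default, not something this article prescribes.

```python
from collections import defaultdict
from typing import Dict, List, Sequence


def reciprocal_rank_fusion(rankings: Sequence[Sequence[str]], k: int = 60) -> List[str]:
    """Fuse several ranked lists: each appearance contributes 1 / (k + rank)."""
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, chunk_id in enumerate(ranking, start=1):
            scores[chunk_id] += 1.0 / (k + rank)
    # Sort by fused score (highest first); break ties by ID for determinism.
    return sorted(scores, key=lambda chunk_id: (-scores[chunk_id], chunk_id))


# Hypothetical results for a query that contains an exact error code.
keyword_hits = ["err-42", "faq", "guide"]
vector_hits = ["guide", "err-42", "intro"]
fused = reciprocal_rank_fusion([keyword_hits, vector_hits])
```

Chunks that rank well in both lists rise to the top, which is why an exact-match hit for an error code is not drowned out by semantically similar but wrong passages.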

Reranking (big quality jump)

Rerankers re-score the retrieved candidates using a stronger relevance model. In practice, reranking often improves answer quality more than changing the LLM.
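The shape of a rerank step is simple: take the retrieved candidates, score each (query, passage) pair, and re-sort. In the sketch below the scorer is a toy token-overlap function that only stands in for a real relevance model such as a cross-encoder. It is there so the example runs, not because it is a good reranker. All names are hypothetical.

```python
from typing import Callable, List, Tuple


def rerank(
    query: str,
    candidates: List[str],
    score_pair: Callable[[str, str], float],
    top_n: int = 3,
) -> List[Tuple[str, float]]:
    """Re-score retrieved passages against the query and keep the best top_n."""
    scored = [(passage, score_pair(query, passage)) for passage in candidates]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_n]


def toy_overlap_score(query: str, passage: str) -> float:
    """Placeholder scorer (Jaccard overlap of lowercase tokens), NOT a real reranker."""
    q_tokens = set(query.lower().split())
    p_tokens = set(passage.lower().split())
    if not q_tokens or not p_tokens:
        return 0.0
    return len(q_tokens & p_tokens) / len(q_tokens | p_tokens)
```

In a real pipeline you would retrieve a generous candidate set (say, 20 to 50 chunks), pass a model-backed `score_pair`, and send only the top few to the LLM.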

Prompt assembly and context control

Prompting for RAG is not “just paste chunks.” You need structure and guardrails.

- Tell the model to use only provided context for factual claims.

- Require citations for key statements.

- If context is insufficient, instruct it to say what’s missing.

- Keep context within budget using top-k limits and chunk filtering.
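A prompt builder that follows the four rules above might look like the sketch below. It is illustrative only: the wording of `SYSTEM_RULES`, the `source` and `text` keys, and the character-based budget are assumptions (real systems usually budget in tokens), and chunks are assumed to arrive sorted best-first.

```python
from typing import Dict, List

# Hypothetical instruction text encoding the guardrails described above.
SYSTEM_RULES = (
    "Answer using only the numbered context below for factual claims. "
    "Cite the bracketed number of each source you rely on. "
    "If the context does not contain the answer, say what is missing."
)


def build_prompt(
    question: str,
    chunks: List[Dict[str, str]],
    max_context_chars: int = 2000,
) -> str:
    """Assemble rules + numbered context + question, stopping at the budget."""
    blocks = []
    used = 0
    for number, chunk in enumerate(chunks, start=1):
        block = f"[{number}] source: {chunk['source']}\n{chunk['text']}"
        if used + len(block) > max_context_chars:
            break  # chunks are assumed sorted best-first, so drop the tail
        blocks.append(block)
        used += len(block)
    context = "\n\n".join(blocks) if blocks else "(no context retrieved)"
    return f"{SYSTEM_RULES}\n\nContext:\n{context}\n\nQuestion: {question}\nAnswer:"
```

Numbering each block gives the model something concrete to cite, and gives you something to verify when you check citation accuracy later.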

Evaluation: how to measure RAG quality

If you don’t measure retrieval and answer quality, you’ll tune blindly. In 2025, teams typically track three layers:

- Retrieval quality: did the right chunk appear in the top-k?

- Answer faithfulness: is the answer supported by retrieved text?

- Task success: did the user get what they needed?

A practical evaluation loop

- Collect 50–200 real user questions.

- Label what “good sources” look like (gold documents/chunks).

- Measure retrieval hit-rate (top-3/top-5).

- Spot-check hallucinations and citation accuracy.

- Iterate: chunking → hybrid → reranking → guardrails.
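The retrieval hit-rate in this loop is straightforward to compute once you have labeled gold chunks. The sketch below assumes each eval example has a `question` and a list of `gold_ids`, and that `retrieve` is any function returning ranked chunk IDs. Both are hypothetical shapes chosen for illustration.

```python
from typing import Callable, Dict, List, Sequence


def hit_rate_at_k(
    eval_set: Sequence[Dict],
    retrieve: Callable[[str], List[str]],
    k: int = 5,
) -> float:
    """Fraction of questions with at least one gold chunk in the top-k results."""
    if not eval_set:
        raise ValueError("eval_set must not be empty")
    hits = 0
    for example in eval_set:
        retrieved = retrieve(example["question"])[:k]
        if set(retrieved) & set(example["gold_ids"]):
            hits += 1
    return hits / len(eval_set)
```

Tracking this number at top-3 and top-5 after each pipeline change (chunking, hybrid, reranking) shows whether the change helped retrieval before you look at generated answers at all.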

Security and privacy for enterprise RAG

Enterprise RAG fails the moment it leaks internal docs to the wrong user. Permissions have to be enforced in retrieval, not after generation.

- Access control at retrieval: filter chunks by user/team permission.

- PII redaction: remove sensitive identifiers where required.

- Audit logs: store which docs were retrieved for each query.
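To make "enforce permissions in retrieval" concrete, the sketch below filters chunks by team before any scoring happens and records which documents were returned. The chunk fields (`doc_id`, `allowed_teams`, `text`), the in-memory `audit_log` list, and the pluggable `score` function are all assumptions for illustration. A real deployment would typically push the filter into the search backend and write to durable log storage.

```python
import time
from typing import Callable, Dict, List, Optional, Set


def permitted(chunk: Dict, user_teams: Set[str]) -> bool:
    """A chunk is visible if the user shares at least one team with it."""
    return bool(set(chunk["allowed_teams"]) & user_teams)


def secure_retrieve(
    user_id: str,
    user_teams: Set[str],
    query: str,
    index: List[Dict],
    score: Callable[[str, str], float],
    k: int = 3,
    audit_log: Optional[List[Dict]] = None,
) -> List[Dict]:
    """Filter by permission first, then rank, then log what was returned."""
    visible = [chunk for chunk in index if permitted(chunk, user_teams)]
    ranked = sorted(visible, key=lambda c: score(query, c["text"]), reverse=True)[:k]
    if audit_log is not None:
        audit_log.append({
            "ts": time.time(),
            "user_id": user_id,
            "query": query,
            "doc_ids": [chunk["doc_id"] for chunk in ranked],
        })
    return ranked
```

Because forbidden chunks never reach the ranking step, they cannot end up in the prompt, which is the property that post-generation filtering cannot guarantee.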

Common mistakes (and fixes)

Mistake 1: indexing everything without structure

Fix: index with metadata, dedup, and chunk by sections.

Mistake 2: no hybrid search for exact terms

Fix: use hybrid retrieval so keyword precision complements embeddings.

Mistake 3: assuming the LLM will “behave”

Fix: enforce citations and refusal rules when context is missing.

Mistake 4: shipping without evaluation

Fix: build a small eval set and track retrieval + faithfulness over time.

FAQ

What is RAG best used for?

RAG is best for question answering over internal documentation, policy lookup, product support, and any workflow where the answer must be grounded in your private knowledge sources.

Do I need a vector database for RAG?

Not always. For small datasets, you can store embeddings in a traditional database. For larger datasets or higher throughput, a vector database makes retrieval faster and easier to manage.

Does RAG eliminate hallucinations?

No, but it reduces them when retrieval is good and the model is required to cite sources. Poor chunking or irrelevant retrieval can still produce confidently wrong answers.

RAG vs fine-tuning: which should I do first?

Most teams should start with RAG. Fine-tuning is useful when you need consistent style/format or domain behavior, but it won’t keep facts updated unless your training pipeline is constantly refreshed.

Bottom line

RAG is the practical path to accurate, up-to-date answers on your own data in 2025. If you focus on indexing quality, retrieval strategy, and evaluation, you can get reliable results without massive infrastructure or model training.

Next step: pick one internal dataset, build a small RAG prototype, then measure retrieval hit-rate and citation accuracy before scaling.