Boost AI Success with MLOps and Model Deployment in 2025

MLOps and model deployment are the backbone of modern AI success. Without them, even the smartest models can fail in real-world use. These practices help teams keep models accurate, reliable, and easy to update.

With practices like CI/CD, short for continuous integration and continuous delivery (or deployment), you can ship changes faster and more safely. For example, one team using MLOps reduced their model deployment time from several days to just a few hours. That kind of speed is a real advantage in fast-moving markets.

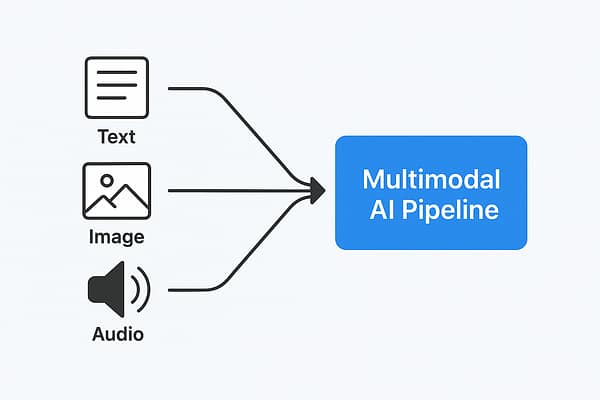

In one of our recent AI projects, we combined text and voice inputs to create smarter onboarding. That work only scaled because we had solid MLOps and model deployment practices in place. This is where MLOps shines. It turns experiments into stable systems.

- 7 Benefits of MLOps and Model Deployment

- MLOps and the Model Lifecycle

- A Practical MLOps Pipeline for 2025

- Real-World Use Cases

- Monitoring and Model Health in Production

- Governance, Security, and Compliance

- Future Trends in MLOps and Model Deployment

- Getting Started with MLOps

- Frequently Asked Questions

7 Benefits of MLOps and Model Deployment

MLOps and model deployment help teams handle AI the smart way. Here is what you gain when you treat models like products and not like one-off demos.

- Better results from your models with real-time monitoring

- Faster updates using CI/CD pipelines

- Fewer mistakes because of automation

- Smoother teamwork across data and IT teams

- Clear tracking of each model version

- Quick fixes if something goes wrong

- More time to try new ideas and improvements

Think of MLOps like a high-speed assembly line for AI. Each step is repeatable. Each change is tracked. Each release is safer. That is how you avoid painful surprises after launch.

For more details, see Google’s MLOps guide.

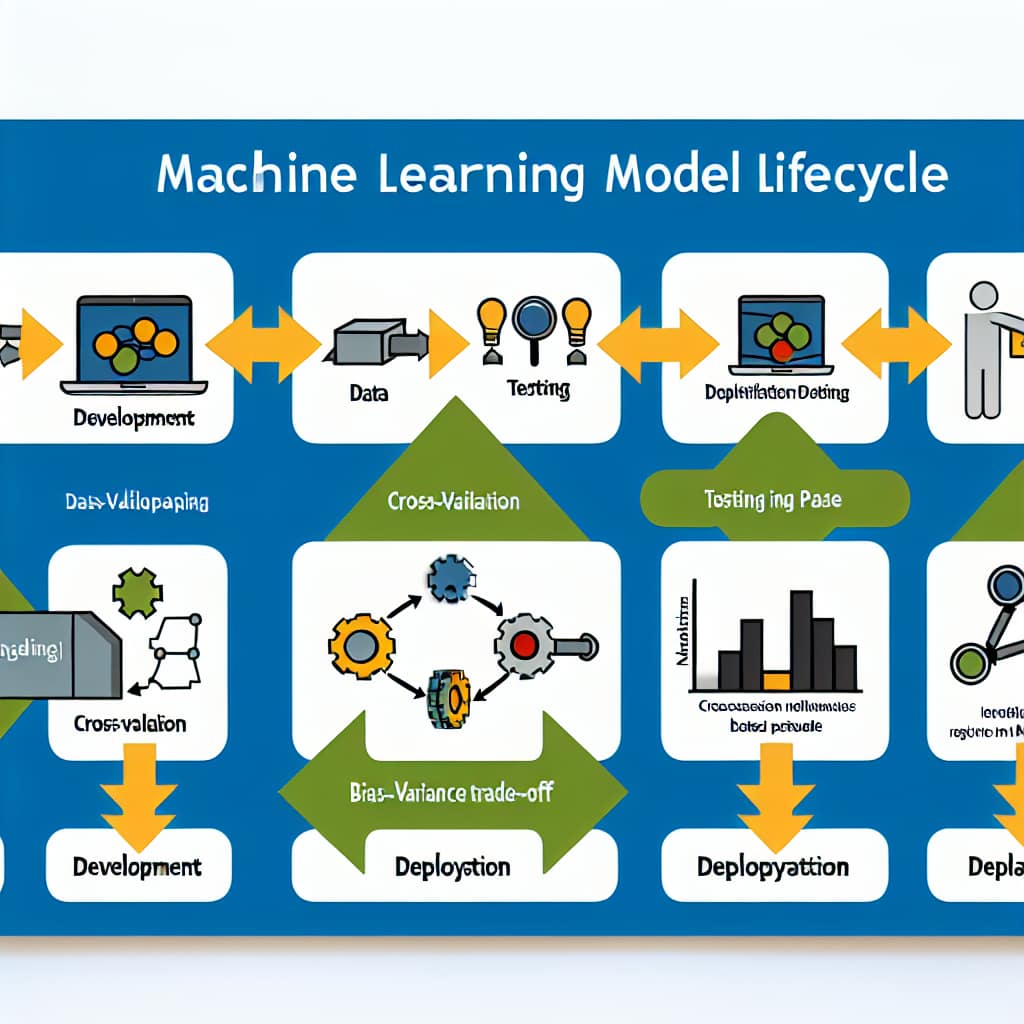

MLOps and the Model Lifecycle

MLOps is not only deployment. It covers the entire journey of a model.

A typical model lifecycle in 2025 includes these stages:

- Problem framing: Define the business goal and success metric.

- Data collection: Gather training data and label it where needed.

- Data validation: Check schema, ranges, missing values, and leakage risks.

- Training: Train a baseline model and compare improvements.

- Evaluation: Validate on a holdout set and test for bias and drift risks.

- Packaging: Bundle the model with dependencies and versioned artifacts.

- Deployment: Release with safe rollout methods like canary or blue-green.

- Monitoring: Track quality, drift, latency, cost, and failures.

- Retraining: Update when performance drops or new data arrives.

- Retirement: Decommission models that no longer add value.

Many teams only focus on training and forget the rest. That is why models degrade. Data changes. User behavior shifts. Regulations evolve. If you do not plan for the full lifecycle, your model will drift and your product will suffer.

MLOps gives you repeatability and control across the lifecycle. It also creates shared language between data scientists, engineers, and operations teams. That coordination is often the biggest win.

A Practical MLOps Pipeline for 2025

A good pipeline is simple, not fancy. It helps your team move fast while keeping quality high. Here is a practical MLOps pipeline that fits most teams in 2025.

Step 1: Data checks before training

Bad data creates bad models. Run automated checks before every training job. Check schema, duplicates, missing values, and label quality. Also check for training-serving skew, which is when the data used for training differs from the data the model sees in production.
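As a rough illustration of the checks above, here is a minimal sketch in plain Python. The function name `validate_rows`, the row format (a list of dicts), and the `label` column name are assumptions made for this example, not part of any specific tool. It covers schema, missing values, duplicates, and label values. It does not cover training-serving skew, which needs a sample of production data to compare against.

```python
from collections import Counter


def validate_rows(rows, schema, label_key="label", allowed_labels=None):
    """Return a list of human-readable problems found in `rows`.

    rows: list of dicts (one per example).
    schema: dict mapping column name -> expected Python type.
    """
    problems = []
    seen = Counter()
    for i, row in enumerate(rows):
        # Schema and missing-value check for every expected column.
        for col, expected_type in schema.items():
            if col not in row or row[col] is None:
                problems.append(f"row {i}: missing value for '{col}'")
            elif not isinstance(row[col], expected_type):
                problems.append(f"row {i}: '{col}' is not {expected_type.__name__}")
        # Label quality check: only known label values are accepted.
        if allowed_labels is not None and row.get(label_key) not in allowed_labels:
            problems.append(f"row {i}: unexpected label {row.get(label_key)!r}")
        # Build a hashable fingerprint of the row to count exact duplicates.
        seen[tuple(sorted((k, repr(v)) for k, v in row.items()))] += 1
    duplicates = sum(count - 1 for count in seen.values() if count > 1)
    if duplicates:
        problems.append(f"{duplicates} duplicate row(s)")
    return problems
```

A training job could call this first and refuse to start when the returned list is not empty.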

Step 2: Reproducible training

Training should be reproducible. Track the dataset version, feature code version, hyperparameters, and random seed. Store the trained artifact in a registry. If you cannot reproduce a model, you cannot debug it later.
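One way to picture this is a training function that returns a run record alongside the model. The sketch below is an assumption-heavy stand-in: `train_with_record` does no real training (random weights play the role of a fitted model), and `code_version` is a hypothetical string such as a commit hash. The point is only to show which fields get recorded and that a fixed seed makes the run repeatable.

```python
import hashlib
import json
import random


def dataset_fingerprint(rows):
    """Stable SHA-256 hash of the dataset contents, used as a dataset version."""
    payload = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def train_with_record(rows, hyperparams, seed, code_version):
    """Placeholder training run that returns (weights, run_record)."""
    rng = random.Random(seed)  # local RNG so the seed fully controls randomness
    # Stand-in for real training: seeded random weights instead of a fitted model.
    weights = [rng.uniform(-1, 1) for _ in range(hyperparams["n_weights"])]
    record = {
        "dataset_version": dataset_fingerprint(rows),
        "code_version": code_version,
        "hyperparams": hyperparams,
        "seed": seed,
    }
    return weights, record
```

Running it twice with the same inputs yields the same weights and the same record, which is the property you need when debugging a model months later.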

Step 3: Evaluation gates

Use simple quality gates. If a new model does not beat the baseline on key metrics, it should not ship. Include checks for fairness where relevant. Also include stress tests. For example, test performance on rare classes or edge inputs.
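A quality gate can be as small as one function. The sketch below assumes metrics are passed as dicts where higher is better. The name `passes_gate`, the `floors` argument for stress-test slices, and metric names like `rare_class_recall` are illustrative choices, not a standard API.

```python
def passes_gate(candidate, baseline, min_gain=0.0, floors=None):
    """Return (ok, reasons) for a candidate model.

    candidate / baseline: dicts of metric name -> value (higher is better).
    floors: optional dict of metric name -> minimum acceptable value,
            useful for stress tests such as rare-class recall.
    """
    reasons = []
    # The candidate must strictly beat the baseline on every key metric.
    for metric, base_value in baseline.items():
        cand_value = candidate.get(metric)
        if cand_value is None:
            reasons.append(f"{metric}: missing from candidate")
        elif cand_value <= base_value + min_gain:
            reasons.append(f"{metric}: {cand_value} does not beat baseline {base_value}")
    # Stress-test slices must stay above a fixed floor.
    for metric, floor in (floors or {}).items():
        value = candidate.get(metric)
        if value is None or value < floor:
            reasons.append(f"{metric}: below floor {floor}")
    return (not reasons, reasons)
```

Returning the reasons along with the decision makes a blocked release easier to explain in a pull request or a build log.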

Step 4: Packaging and registry

Package models like software releases. Use a model registry to track versions and metadata. Record what data was used and what evaluation results were achieved. This improves traceability and supports audits.
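To make the idea concrete, here is a toy in-memory registry. It is a sketch only: real registries persist to a database or object store, and the class names, stage names (`staging`, `production`, `archived`), and artifact URIs used here are assumptions for illustration.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class ModelVersion:
    name: str
    version: int
    artifact_uri: str      # where the packaged model lives (hypothetical URI)
    dataset_version: str   # which data was used for training
    metrics: dict          # evaluation results recorded at registration time
    stage: str = "staging"


class ModelRegistry:
    def __init__(self):
        self._versions = {}  # model name -> list of ModelVersion

    def register(self, name, artifact_uri, dataset_version, metrics):
        """Store a new version with an auto-incremented version number."""
        versions = self._versions.setdefault(name, [])
        entry = ModelVersion(name, len(versions) + 1, artifact_uri,
                             dataset_version, dict(metrics))
        versions.append(entry)
        return entry

    def promote(self, name, version):
        """Mark one version as production and archive the previous one."""
        versions = self._versions[name]
        if not any(v.version == version for v in versions):
            raise KeyError(f"{name} has no version {version}")
        updated = []
        for v in versions:
            if v.version == version:
                v = replace(v, stage="production")
            elif v.stage == "production":
                v = replace(v, stage="archived")
            updated.append(v)
        self._versions[name] = updated

    def production(self, name):
        """Return the current production version, or None."""
        for v in self._versions.get(name, []):
            if v.stage == "production":
                return v
        return None
```

Because each entry carries the dataset version and metrics, an auditor can answer "what is running and what was it trained on" from the registry alone.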

Step 5: CI/CD for deployment

CI/CD is what turns model changes into safe releases. You can trigger deployments on registry approvals. You can also run automated tests on model endpoints. This reduces manual steps and lowers the chance of mistakes.
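The automated endpoint tests mentioned above can start as a simple smoke test that a CI job runs after each deployment. In this sketch, `predict` is any callable that stands in for your endpoint client, and the payload shape and latency limit are assumptions you would replace with your own.

```python
import time


def smoke_test(predict, cases, max_latency_s=0.5):
    """Call `predict` on each case and return a list of failure messages.

    cases: list of (payload, check) pairs, where check(result) -> bool.
    """
    failures = []
    for payload, check in cases:
        start = time.perf_counter()
        try:
            result = predict(payload)
        except Exception as exc:
            failures.append(f"{payload!r}: raised {type(exc).__name__}")
            continue
        elapsed = time.perf_counter() - start
        if elapsed > max_latency_s:
            failures.append(f"{payload!r}: took {elapsed:.3f}s")
        if not check(result):
            failures.append(f"{payload!r}: unexpected response {result!r}")
    return failures
```

A pipeline step can fail the build whenever the returned list is not empty, which blocks the release without anyone checking by hand.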

Step 6: Safe rollout patterns

Use canary releases when possible. Send a small percent of traffic to the new model. Compare results. If metrics look good, increase traffic. If anything looks risky, roll back quickly. This keeps user impact low.
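The canary logic above can be sketched in two small functions. This is a simplified illustration, not a production traffic router: `route` assumes each request carries a string ID, and `next_step` compares only error rates, with a `tolerance` and doubling `step` chosen arbitrarily for the example.

```python
import hashlib


def route(request_id, canary_percent):
    """Deterministically assign a request to 'canary' or 'stable'.

    Hashing the ID keeps the same request (or user) on the same model.
    """
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # bucket in 0..99
    return "canary" if bucket < canary_percent else "stable"


def next_step(canary_error_rate, stable_error_rate, canary_percent,
              tolerance=0.01, step=2):
    """Return the next canary percentage; 0 means roll back."""
    if canary_error_rate > stable_error_rate + tolerance:
        return 0  # canary is clearly worse: send all traffic back to stable
    return min(100, canary_percent * step)  # otherwise widen the rollout
```

In practice you would compare more than error rate, for example latency and a business metric, before increasing traffic.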

Step 7: Monitoring and feedback

Monitoring is not optional. Track model outputs, latency, error rates, and drift signals. If you can, collect ground truth labels. That is how you measure real quality over time.
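For latency and error rates, a rolling window over recent requests is often enough to get started. The class below is a minimal sketch; the name `RollingMonitor`, the window size, and the nearest-rank percentile method are assumptions for this example.

```python
import math
from collections import deque


class RollingMonitor:
    """Track error rate and p95 latency over the most recent requests."""

    def __init__(self, window=100):
        # deque with maxlen drops the oldest entry automatically.
        self._latencies = deque(maxlen=window)
        self._errors = deque(maxlen=window)

    def record(self, latency_s, ok):
        self._latencies.append(latency_s)
        self._errors.append(0 if ok else 1)

    def error_rate(self):
        return sum(self._errors) / len(self._errors) if self._errors else 0.0

    def p95_latency(self):
        if not self._latencies:
            return 0.0
        ordered = sorted(self._latencies)
        # Nearest-rank percentile: smallest value with >= 95% of samples at or below it.
        rank = max(1, math.ceil(0.95 * len(ordered)))
        return ordered[rank - 1]
```

The serving code calls `record` once per request, and a scheduled job reads the two metrics to decide whether to raise an alert.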

This pipeline does not require a huge platform team. You can start with a few scripts and a registry. Then you can mature it over time. The key is consistent automation and clear ownership.

Real-World Use Cases

MLOps and model deployment are making big impacts in many areas. The use cases below show why lifecycle thinking matters.

- Banking: Fraud detection models need frequent refreshes. Fraud patterns change quickly. Automated retraining and deployment keep detection strong without breaking production services.

- Healthcare: Clinical decision tools must follow strict validation and change control. MLOps provides traceability and safer release workflows.

- Retail: Recommendation systems shift with seasons and trends. MLOps allows rapid experimentation while controlling risk.

- Logistics: Route optimization benefits from near real-time data. Monitoring and quick updates help models adapt to weather, traffic, and supply constraints.

In short, MLOps and model deployment help businesses stay sharp and responsive. They reduce downtime and keep models aligned with reality.

Explore IBM’s MLOps use cases for more examples.

Monitoring and Model Health in Production

Once a model is deployed, the real work begins. Production is where edge cases show up. It is also where data drift happens. Monitoring helps you catch problems early so your AI stays accurate and useful.

Here are the most important monitoring layers for 2025:

- Service health: Latency, error rates, timeouts, and throughput.

- Data drift: Input distributions change over time. Track key features and embeddings.

- Concept drift: The relationship between inputs and labels changes. This is harder to detect, but important.

- Prediction quality: Precision, recall, calibration, and business outcomes when labels are available.

- Bias and fairness: Check subgroup performance when relevant or legally required.

- Cost monitoring: Track compute usage, GPU time, and inference cost per request.
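For the data drift layer, one commonly used score is the Population Stability Index (PSI), which compares the binned distribution of a feature at training time with its distribution in production. The sketch below is one simple way to compute it for a single numeric feature. The equal-width binning, bin count, and `eps` smoothing are assumptions, and any alert threshold should be tuned on your own data.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between two numeric samples.

    expected: reference sample (e.g. training data).
    actual: recent sample (e.g. production inputs).
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant features

    def histogram(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    e, a = histogram(expected), histogram(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

Identical samples score 0, and the score grows as the production distribution moves away from the reference.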

A simple approach is to define alert thresholds. For example, alert when latency rises above a set limit, or when drift scores exceed a baseline. Also define what happens next. Who gets paged? What is the rollback plan? What is the retraining trigger?

If you have limited resources, start with three things: endpoint reliability, latency, and drift. Those cover the most common failures. Then add label-based performance monitoring as you mature.
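Thresholds and follow-up actions can live together in a small rule table, so every alert already says who owns it and what to do. The sketch below uses hypothetical metric names, owners, and actions. Treat it as a starting shape rather than a finished alerting system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AlertRule:
    metric: str       # name of the monitored metric (hypothetical names below)
    threshold: float  # alert when the metric rises above this value
    owner: str        # who gets paged
    action: str       # what happens next, e.g. "rollback" or "retrain"


def evaluate(rules, metrics):
    """Return the rules whose metric is present and above its threshold."""
    return [r for r in rules
            if r.metric in metrics and metrics[r.metric] > r.threshold]


RULES = [
    AlertRule("p95_latency_s", 0.5, owner="serving-oncall", action="rollback"),
    AlertRule("error_rate", 0.02, owner="serving-oncall", action="rollback"),
    AlertRule("drift_psi", 0.2, owner="ml-oncall", action="retrain"),
]
```

For example, `evaluate(RULES, {"p95_latency_s": 0.3, "drift_psi": 0.35})` returns only the drift rule, which points to the ML on-call and a retraining action.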

Governance, Security, and Compliance

MLOps is also about control. In 2025, governance and security are more important than ever. Teams need to know what model is running, who approved it, and what data it was trained on.

Here are practical governance steps that improve safety without slowing teams down:

- Access control: Limit who can train, register, approve, and deploy models.

- Audit logs: Track every deployment, rollback, and registry change.

- Model cards: Store intended use, limitations, and evaluation results.

- Data lineage: Track which datasets and features were used for training.

- Secrets management: Store API keys and tokens securely, not in code.

- Privacy safeguards: Remove sensitive data and follow retention rules.
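Several of these steps come down to keeping structured records. As one illustration, a model card can be a small data class stored next to the model artifact. The field names below are assumptions based on the items in the list above, not a formal model card standard.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class ModelCard:
    name: str
    version: int
    intended_use: str
    limitations: list
    evaluation: dict          # metric name -> value
    training_datasets: list   # data lineage: dataset versions used for training
    approved_by: str = ""     # filled in during the approval step

    def to_json(self):
        """Serialize the card so it can be stored with the model artifact."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)


def missing_fields(card):
    """Return the names of fields that are still empty."""
    return [name for name, value in asdict(card).items()
            if value in ("", [], {}, None)]
```

A deployment step could refuse to proceed while `missing_fields` returns anything, which turns the approval requirement into an automated check.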

Compliance requirements vary by industry. Still, the pattern is similar. You need traceability, repeatability, and accountability. MLOps helps you build those habits early.

Future Trends in MLOps and Model Deployment

These practices are growing fast. Here are the top trends to watch in 2025 and beyond.

- AI-based monitoring that warns you before models fail

- More no-code tools that let teams deploy models with fewer manual steps

- Support for multimodal models using voice, text, and images together

- Integration with edge devices for faster AI on the go

- More governance features built into model registries and platforms

Just like smartphones replaced flip phones, these new tools make AI easier and better. They also reduce the gap between research and production.

Visit Microsoft’s MLOps research to learn more.

Getting Started with MLOps

If you are new to this practice, start small. Pick one process, maybe model deployment or monitoring, and improve it. Many teams begin with basic CI/CD pipelines before moving to full automation.

The key is consistency. MLOps is not a one-time fix. It is an ongoing practice that improves as your team learns what works best.

In practice, successful MLOps requires collaboration between data scientists, engineers, and operations teams. Everyone must understand the benefits of streamlined workflows and faster deployment cycles.

Here is a simple checklist to use this week:

- Define a clear model owner for each deployed model

- Create a basic model registry with versions and metadata

- Add data validation checks before training

- Set evaluation gates that block low-quality releases

- Deploy with a safe rollout strategy like canary

- Add monitoring for latency, errors, and drift

- Create a rollback plan and test it once

Frequently Asked Questions

What is MLOps?

MLOps applies software engineering and IT operations practices to machine learning so teams can manage models across training, deployment, monitoring, and updates.

How does CI/CD benefit AI projects?

CI/CD helps teams ship model updates faster with fewer errors. It automates tests, approvals, and releases so deployments are safer.

Why is model monitoring important?

Monitoring helps you catch drift, failures, and performance drops early. That keeps your AI accurate and reliable in production.

What are the common challenges in MLOps?

Common challenges include data quality, versioning, drift, evaluation gaps, and coordination across teams. Strong automation reduces these issues.

How does automation affect MLOps?

Automation saves time, cuts mistakes, and improves consistency. It also makes model updates repeatable and easier to audit.

Conclusion

MLOps and model deployment make AI faster, stronger, and easier to manage. They turn your ideas into real-world results. They also reduce risk by making releases predictable and measurable.

To keep improving, explore more tips in our GenAI archive.

Author Bio: Sudhir Dubey is an AI researcher and data science educator focused on practical AI deployment and fine-tuning strategies for enterprise use cases.