Advanced MLOps: Essential Strategies for Efficient Model Deployment in 2025

Introduction to MLOps

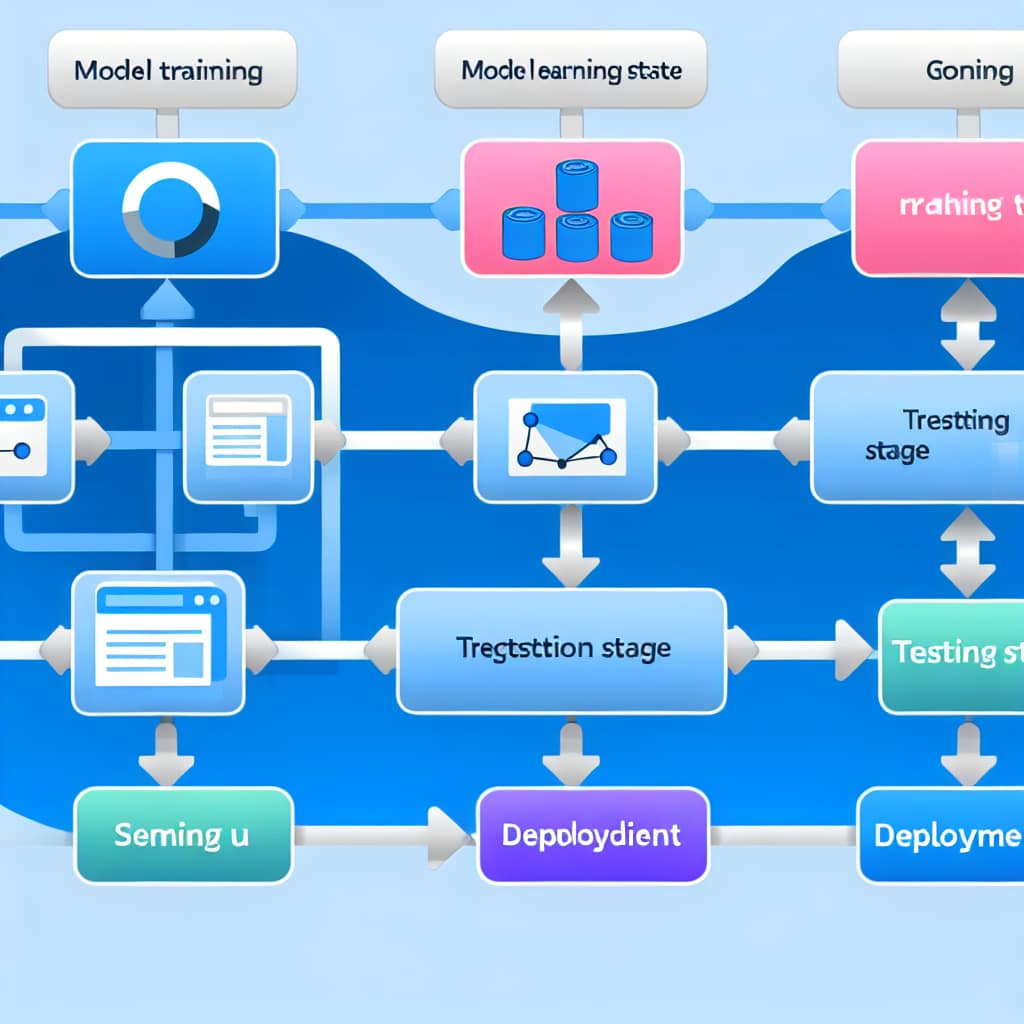

MLOps, or Machine Learning Operations, is the discipline focused on streamlining the lifecycle of machine learning models, from development through deployment and monitoring. Drawing on DevOps principles, MLOps tackles challenges specific to machine learning systems: versioning data and trained models as well as code, testing model behavior as well as software, and scaling both training and inference. The need for MLOps has grown as organizations try to integrate machine learning models more efficiently into their production environments. Done well, this integration lets teams update, monitor, and validate models continuously, reducing downtime and catching accuracy degradation before it affects users.
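
The versioning and update concerns above can be sketched as a minimal in-memory model registry. `ModelRegistry` and its methods are hypothetical names chosen for illustration, not the API of any registry product. Each artifact gets an ID derived from its SHA-256 hash, promotions are recorded in order, and `rollback()` restores the previous production version, which is one way to recover from a bad update with minimal downtime.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ModelVersion:
    version_id: str   # short content hash of the serialized artifact
    artifact: bytes   # serialized model weights (format left open here)
    metrics: dict     # evaluation metrics recorded at registration time


@dataclass
class ModelRegistry:
    """Hypothetical in-memory registry; a real one would persist its state."""
    versions: Dict[str, ModelVersion] = field(default_factory=dict)
    history: List[str] = field(default_factory=list)  # promotions, oldest first

    def register(self, artifact: bytes, metrics: dict) -> str:
        # Identical artifacts hash to the same ID, so re-registering is a no-op.
        version_id = hashlib.sha256(artifact).hexdigest()[:12]
        self.versions.setdefault(version_id, ModelVersion(version_id, artifact, metrics))
        return version_id

    def promote(self, version_id: str) -> None:
        if version_id not in self.versions:
            raise KeyError(f"unknown version {version_id}")
        self.history.append(version_id)

    def production(self) -> Optional[ModelVersion]:
        return self.versions[self.history[-1]] if self.history else None

    def rollback(self) -> Optional[ModelVersion]:
        # Retire the current production version and fall back to the previous one.
        if self.history:
            self.history.pop()
        return self.production()


registry = ModelRegistry()
v1 = registry.register(b"weights-v1", {"auc": 0.91})
v2 = registry.register(b"weights-v2", {"auc": 0.93})
registry.promote(v1)
registry.promote(v2)
print(registry.production().metrics)  # {'auc': 0.93}
print(registry.rollback().metrics)    # {'auc': 0.91}
```

Hashing the artifact rather than assigning sequential numbers means the same weights always map to the same version ID, which makes it easy to tell whether two environments are actually serving the same model.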

Integrating MLOps with DevOps

MLOps and DevOps are increasingly converging in AI model deployment. By aligning the two methodologies, businesses can deploy machine learning models with the same rigor they apply to conventional software. The core of this integration is a set of shared practices, chiefly continuous integration and continuous deployment (CI/CD), which enable rapid iteration and frequent updates. A CI/CD pipeline can, for instance, automatically test and validate each new model version before release, significantly shortening the path from development to production.
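
As a sketch of automated model validation in a CI/CD pipeline, the function below acts as a hypothetical promotion gate: it checks a candidate model's metrics against fixed minimums and against the current production baseline, and reports every failure. The metric names, thresholds, and tolerance are illustrative assumptions, and the code assumes every metric is "higher is better".

```python
def validation_gate(candidate, baseline, min_scores, tolerance=0.01):
    """Return failure messages; an empty list means the candidate may ship.

    Assumes every metric is "higher is better" (e.g. accuracy, AUC).
    """
    failures = []
    for name, floor in min_scores.items():
        score = candidate.get(name)
        if score is None:
            failures.append(f"{name}: missing from candidate metrics")
            continue
        # Absolute quality bar.
        if score < floor:
            failures.append(f"{name}: {score:.3f} below required {floor:.3f}")
        # Regression check against the model currently in production.
        if name in baseline and score < baseline[name] - tolerance:
            failures.append(
                f"{name}: {score:.3f} regressed from baseline {baseline[name]:.3f}"
            )
    return failures


def main():
    # Hard-coded for illustration; a pipeline would read these from the
    # training job's output and from the production model's records.
    candidate = {"accuracy": 0.912, "auc": 0.948}
    baseline = {"accuracy": 0.905, "auc": 0.951}
    failures = validation_gate(candidate, baseline,
                               min_scores={"accuracy": 0.90, "auc": 0.93})
    for message in failures:
        print("FAIL", message)
    print("gate passed" if not failures else "gate failed")
    return 1 if failures else 0
```

In a CI job, the script would end with `sys.exit(main())`, so a nonzero return fails the job and blocks deployment. In this example the candidate's AUC is slightly below the baseline but within the 0.01 tolerance, so the gate passes; the tolerance keeps normal evaluation noise from blocking every release.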

These practices help maintain an uninterrupted flow from data acquisition through model training to deployment. CI/CD tools long used in DevOps, including Jenkins and GitLab CI/CD, are now being adapted to the distinct requirements of machine learning workflows, for example by running data validation, training, and evaluation jobs alongside conventional builds and tests.
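
The flow from data acquisition to deployment can be sketched as an ordered list of stages that share a context and stop at the first failure, roughly how stages behave in a Jenkins or GitLab CI/CD pipeline. The stage functions here are hypothetical placeholders (a one-parameter least-squares fit stands in for real training, and the quality threshold is arbitrary), not the API of either tool.

```python
from typing import Callable, Dict, List, Tuple

Context = Dict[str, object]
Stage = Tuple[str, Callable[[Context], None]]


def acquire_data(ctx: Context) -> None:
    # Placeholder: a real stage would pull a versioned dataset snapshot.
    ctx["data"] = [(1.0, 2.1), (2.0, 3.9), (3.0, 6.2), (4.0, 7.8)]


def train(ctx: Context) -> None:
    # Toy model: least-squares slope through the origin, y = w * x.
    data = ctx["data"]
    ctx["w"] = sum(x * y for x, y in data) / sum(x * x for x, _ in data)


def evaluate(ctx: Context) -> None:
    w = ctx["w"]
    mae = sum(abs(y - w * x) for x, y in ctx["data"]) / len(ctx["data"])
    ctx["mae"] = mae
    if mae > 0.5:  # hypothetical quality threshold
        raise ValueError(f"MAE {mae:.3f} exceeds threshold")


def deploy(ctx: Context) -> None:
    # Placeholder: a real stage would push the model to a registry or server.
    ctx["deployed"] = True


def run_pipeline(stages: List[Stage]) -> Context:
    ctx: Context = {}
    for name, stage in stages:
        try:
            stage(ctx)
        except Exception as exc:
            # Fail fast: later stages (e.g. deploy) never run after a failure.
            ctx["failed_stage"] = name
            ctx["error"] = str(exc)
            break
    return ctx


PIPELINE = [("acquire", acquire_data), ("train", train),
            ("evaluate", evaluate), ("deploy", deploy)]
```

Calling `run_pipeline(PIPELINE)` runs all four stages and marks the model as deployed; if `evaluate` raised, the returned context would record the failing stage and `deploy` would never execute.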

Advanced Model Deployment Techniques

As AI models grow in complexity, the techniques used to deploy them must evolve as well. One widely adopted approach is containerization: Docker packages a model together with its code, dependencies, and runtime into an image, and Kubernetes orchestrates the resulting containers across a cluster. Because the same image runs in development, testing, and production, the environment stays consistent across stages. Kubernetes can also add or remove serving replicas as load changes, so this approach simplifies deployment workflows and makes scaling easier.
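
A container image for model serving typically wraps a small HTTP service. The sketch below uses only the Python standard library and assumes a hypothetical linear model with hard-coded weights. It exposes a `/healthz` route that a Kubernetes liveness or readiness probe could target and a `/predict` route that accepts JSON; the route names and payload shape are illustrative choices, not a standard.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical model: in a real image the weights would be baked in at build
# time or loaded from a mounted volume when the container starts.
WEIGHTS = [0.4, -1.2, 2.0]
BIAS = 0.5


def predict(features):
    if len(features) != len(WEIGHTS):
        raise ValueError(f"expected {len(WEIGHTS)} features, got {len(features)}")
    return sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS


def handle(method, path, body=b""):
    """Routing logic kept separate from the server so it is easy to test."""
    if method == "GET" and path == "/healthz":
        return 200, {"status": "ok"}
    if method == "POST" and path == "/predict":
        try:
            features = json.loads(body)["features"]
            return 200, {"prediction": predict(features)}
        except (ValueError, KeyError, TypeError) as exc:
            return 400, {"error": str(exc)}
    return 404, {"error": "not found"}


class Handler(BaseHTTPRequestHandler):
    def _respond(self, status, payload):
        data = json.dumps(payload).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def do_GET(self):
        self._respond(*handle("GET", self.path))

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        self._respond(*handle("POST", self.path, self.rfile.read(length)))


def serve(port=8080):
    # Bind to all interfaces so the port is reachable from outside the container.
    HTTPServer(("0.0.0.0", port), Handler).serve_forever()
```

The container's entrypoint would call `serve()`. Because every replica built from the same image behaves identically, an orchestrator can run several copies behind a load balancer and restart any replica whose health check fails.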

Another notable technique is serverless deployment. Because the cloud provider manages the underlying infrastructure, serverless platforms let data scientists concentrate on the model itself, which speeds up deployment. For example, AWS Lambda and Google Cloud Functions scale automatically with request volume and bill only for the compute actually used, improving resource utilization. They suit lightweight models best, since functions run under memory and execution-time limits and can incur cold-start latency.
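
For the serverless case, a Python AWS Lambda function is a module-level handler that receives an `event` dict and a `context` object. The sketch below assumes the function sits behind an API Gateway proxy integration, so the request JSON arrives as a string in `event["body"]` and the response is a dict with `statusCode`, `headers`, and `body`. The scoring model and its feature names are hypothetical stand-ins.

```python
import json


def load_model():
    # Hypothetical stand-in: a real function would deserialize a model file
    # packaged with the deployment or fetched from object storage.
    weights = {"amount": 0.002, "num_items": -0.1}
    return lambda row: sum(weights[k] * float(row.get(k, 0)) for k in weights)


# Runs once per execution environment (cold start); warm invocations reuse MODEL.
MODEL = load_model()


def lambda_handler(event, context):
    try:
        body = json.loads(event.get("body") or "{}")
        score = MODEL(body)
    except (ValueError, TypeError, AttributeError) as exc:
        # Malformed JSON, non-object bodies, or non-numeric fields.
        return {"statusCode": 400, "body": json.dumps({"error": str(exc)})}
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"score": score}),
    }
```

Loading the model at module scope rather than inside the handler means the cost of deserialization is paid once per cold start instead of on every request, which matters when the platform scales out by creating new execution environments.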

Emerging Trends and Technologies

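The MLOps landscape is continually shaped by technological advances and evolving business requirements. One notable trend is the rise of explainable AI (XAI), which addresses the challenge of understanding and interpreting the decisions machine learning models make. Tools such as LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) have become popular with data scientists who need to make model behavior transparent and trustworthy. LIME explains an individual prediction by fitting a simple, interpretable surrogate model around it, while SHAP attributes a prediction to the input features using Shapley values from cooperative game theory.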
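
To show the idea behind SHAP without depending on the `shap` library, the sketch below computes exact Shapley values by brute force over every coalition of features. It assumes one common convention: a feature that is "absent" from a coalition takes a baseline value. This is not the `shap` library's implementation; its cost grows as 2^n in the number of features, so it is only practical for a handful of features, and the toy model is hypothetical.

```python
from itertools import combinations
from math import factorial


def shapley_values(f, x, baseline):
    """Exact Shapley values of f(x) relative to f(baseline).

    Features outside a coalition take their baseline value.
    Requires O(2^n) model calls, so this is for illustration only.
    """
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return f(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Standard Shapley weight |S|! (n - |S| - 1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def model(z):
    # Hypothetical toy model with an interaction between features 0 and 1.
    return 2.0 * z[0] + 3.0 * z[1] + z[0] * z[1] - z[2]


x = [1.0, 2.0, 3.0]
base = [0.0, 0.0, 0.0]
phi = shapley_values(model, x, base)
print([round(v, 6) for v in phi])  # [3.0, 7.0, -3.0]
```

The attributions sum to `model(x) - model(base)` (here 7), and the interaction term's contribution of 2 is split equally between features 0 and 1. These are the properties that make Shapley-based explanations attractive for auditing individual predictions.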

Edge computing is also opening new possibilities for real-time data processing. Deploying models on or near the devices that generate the data avoids a round trip to a central server, which reduces latency and cuts the bandwidth spent sending raw data upstream. This trend is particularly evident in industries such as manufacturing and healthcare, where immediate feedback from data is crucial.
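
As a minimal sketch of edge-side processing, the code below scores each sensor reading locally against a bounded rolling window and forwards only anomalies, instead of streaming every raw reading to a central server. The z-score rule, window size, threshold, and sample readings are hypothetical choices for illustration; a production system could run a trained model in their place.

```python
from collections import deque
from statistics import mean, pstdev


class EdgeAnomalyDetector:
    """Rolling z-score detector small enough to run on a constrained device."""

    def __init__(self, window=20, threshold=3.0, min_history=5):
        self.readings = deque(maxlen=window)  # bounded memory on the device
        self.threshold = threshold
        self.min_history = min_history

    def update(self, value):
        """Return True if `value` is anomalous relative to recent readings."""
        anomalous = False
        if len(self.readings) >= self.min_history:
            mu = mean(self.readings)
            sigma = pstdev(self.readings)
            # Skip scoring when recent readings are perfectly constant.
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                anomalous = True
        self.readings.append(value)
        return anomalous


def process_stream(readings, detector):
    # Only anomalies leave the device; normal readings are handled locally.
    return [(i, v) for i, v in enumerate(readings) if detector.update(v)]


readings = [20.1, 20.3, 19.9, 20.2, 20.0, 20.1, 35.7, 20.2, 19.8]
alerts = process_stream(readings, EdgeAnomalyDetector(window=10, threshold=3.0))
print(alerts)  # [(6, 35.7)]
```

Of nine readings, only one leaves the device, and the decision is made immediately, without waiting on a network round trip.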

Real-World Case Studies

Several organizations have used MLOps to improve how they deploy AI models. For instance, a leading retail chain adopted MLOps to manage its demand forecasting models. By automating deployment and monitoring, the company reduced forecast errors by 20% and increased inventory turnover.

Another example is a financial institution that adopted MLOps for its fraud detection models. Continuous model updates allowed the institution to respond quickly to evolving fraud patterns, ultimately helping it avoid millions in potential losses.
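
One common way to decide when a model such as a fraud detector needs retraining is to monitor input drift. The sketch below computes the Population Stability Index (PSI) between a feature's training-time distribution and recent production data. The bin edges, the sample transaction amounts, and the 0.2 alert threshold (a widely used rule of thumb, not a universal standard) are illustrative assumptions, not details from the case above.

```python
import math
from bisect import bisect_right


def bin_proportions(values, edges, eps=1e-6):
    """Share of values in each bin defined by sorted interior `edges`."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    total = len(values)
    # eps keeps empty bins from producing log(0) or division by zero.
    return [max(c / total, eps) for c in counts]


def psi(expected_values, actual_values, edges):
    """Population Stability Index: sum over bins of (a - e) * ln(a / e)."""
    e = bin_proportions(expected_values, edges)
    a = bin_proportions(actual_values, edges)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


def needs_retraining(train_values, recent_values, edges, threshold=0.2):
    return psi(train_values, recent_values, edges) > threshold


edges = [50, 100, 200, 500]  # hypothetical transaction-amount bins
train_amounts = [20, 60, 80, 120, 150, 180, 250, 300, 40, 90]
recent_amounts = [600, 700, 450, 800, 900, 650, 120, 30, 550, 1000]
print(needs_retraining(train_amounts, recent_amounts, edges))  # True
```

A monitoring job could run this check on a schedule for each important feature and trigger the retraining pipeline when the index crosses the threshold, which is one way continuous updates can keep pace with shifting behavior.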

FAQ

What is the main difference between MLOps and DevOps?

MLOps applies DevOps principles to the lifecycle of machine learning models and adds concerns that DevOps does not usually cover, such as versioning training data, retraining models, and monitoring prediction quality over time. DevOps centers on building, testing, and deploying software applications.

How does containerization benefit MLOps?

Containerization packages a model with its dependencies so it runs the same way in every environment, which simplifies deployment, and orchestrators such as Kubernetes make it straightforward to scale model-serving containers with demand.

What are the challenges of implementing MLOps?

Challenges include integrating with existing IT infrastructure, ensuring data security, and managing the complexity of AI models across different environments.

Why is explainable AI important for MLOps?

Explainable AI enhances model transparency, fostering trust and compliance, particularly in regulated industries where understanding model decisions is crucial.

Conclusion

Combining MLOps with modern deployment strategies such as containerization, serverless platforms, and edge deployment is changing how organizations put AI into production. Teams that adopt these practices can run models efficiently, scalably, and reliably, which in turn improves their operations and decision-making. As AI becomes more central to business, keeping up with these trends will matter for professionals and organizations alike.
